A little belated, here is the video for Great Heart, a project created by Jim Schmitz and myself as a collaboration and presented as our final for Phys Comp and for my ICM class last semester. It was also featured in the Winter Show 2017. We still plan to take it into an art or performance context some day, to create a more immersive environment using the same concept. In the meantime, this is a shorter and more digestible explanation of what it does!

Comp Media

Day 98 @ ITP: Phys Comp + ICM

The time has come! Our final Physical Computing class is tomorrow. Jim Schmitz and I have been working on this project since we were assigned to work together on the midterm in October. We have spent around 10 weeks conceptualizing, prototyping, coding, fabricating, troubleshooting, rethinking, reworking, and ultimately finalizing this second phase of our idea. I think there are various directions this project could go in, should we choose to continue it, and other forms it could take as an idea. Having worked on it will also potentially spark ideas for other things I do after this (I can't speak for Jim here, but I hope the same is true for him!).

Today we met to finalize a few details that were keeping us from being 100% finished with the goals we had in mind for the final presentation. It came down to the wire time-wise, but the last things we wanted to add, though seemingly small, were additions we felt would add immensely to the user experience and to how the meaning of our project comes across to someone who doesn't have us there to explain it. We updated the code to read the beats per minute by measuring the time it takes for one full heartbeat wave to register, then repeating it (like a "stamp"). We decided to do this based on the feedback I got from Allison and my classmates while presenting to my ICM class: it was distracting that the sensor would miss beats, and it would not be unethical to fill in the missing beats by approaching it differently. With our new setup, once the sensor gets a new heartbeat reading, it keeps playing the sounds at that rate until the next reading arrives, roughly every five seconds. This way we can give the user a consistent heart rate sound, which is ultimately more calming (some people were starting to worry that something was wrong with their heart). With this method we keep the sound steady without sacrificing the validity of the data we are showing them and playing back to them as sound. As it is, it tracks the heart rate, and whether or not it is lowering, with a slight delay. The notes and flashing heartbeat colors in the visualization play at an interval of 1 minute (60,000 milliseconds) divided by the beats per minute, so the playback rate keeps updating as new readings come in.
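The timing rule above (one minute, or 60,000 ms, divided by the BPM) and the "stamp" behavior could be sketched in JavaScript, the language P5 runs on, roughly as follows. This is a minimal illustration, not the project's actual code; `beatIntervalMs` and `HeartbeatStamp` are hypothetical names, and the sketch assumes the caller passes in the current time in milliseconds.

```javascript
// A minimal sketch, not the project's actual code.
// One minute is 60,000 ms, so the gap between beats is 60000 / bpm.
const MS_PER_MINUTE = 60000;

function beatIntervalMs(bpm) {
  if (!(bpm > 0)) throw new RangeError("bpm must be a positive number");
  return MS_PER_MINUTE / bpm;
}

// Hypothetical helper that "stamps" the last known heartbeat at a steady rate.
// Call update() every frame with the current time in ms;
// it returns true when the next note and color flash should fire.
class HeartbeatStamp {
  constructor(bpm) {
    this.setBpm(bpm);
    this.lastBeatMs = 0; // time of the most recent stamped beat
  }

  // Called whenever the sensor reports a new BPM (about every 5 s in the post)
  setBpm(bpm) {
    this.interval = beatIntervalMs(bpm);
  }

  update(nowMs) {
    if (nowMs - this.lastBeatMs >= this.interval) {
      this.lastBeatMs = nowMs;
      return true;
    }
    return false;
  }
}
```

In a P5 sketch, `draw()` could call `stamp.update(millis())` and play the note when it returns true. Because `draw()` runs once per frame, each beat lands on the nearest frame, which should be close enough at typical frame rates.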

We also added a "Challenge" mode, which uses the new BPM data to detect when the user's heart rate has dropped by 10%. At the end of both the "Challenge" and "Duration" options, the user sees their initial pulse rate next to their final pulse rate.
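The 10% "Challenge" check and the end-of-session comparison could look something like this minimal sketch. The function names and the exact comparison (current BPM at or below 90% of the starting BPM) are assumptions for illustration, not taken from the project code.

```javascript
// Hypothetical sketch of the "Challenge" check: has the pulse dropped by at
// least 10% from the reading taken at the start of the session?
const CHALLENGE_DROP = 0.1; // 10%

function challengeMet(initialBpm, currentBpm, drop = CHALLENGE_DROP) {
  return currentBpm <= initialBpm * (1 - drop);
}

// Text for the end screen shown after both "Challenge" and "Duration"
function sessionSummary(initialBpm, finalBpm) {
  return `Starting pulse: ${Math.round(initialBpm)} bpm | Final pulse: ${Math.round(finalBpm)} bpm`;
}
```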

Here are some screenshots of our current visualizations:

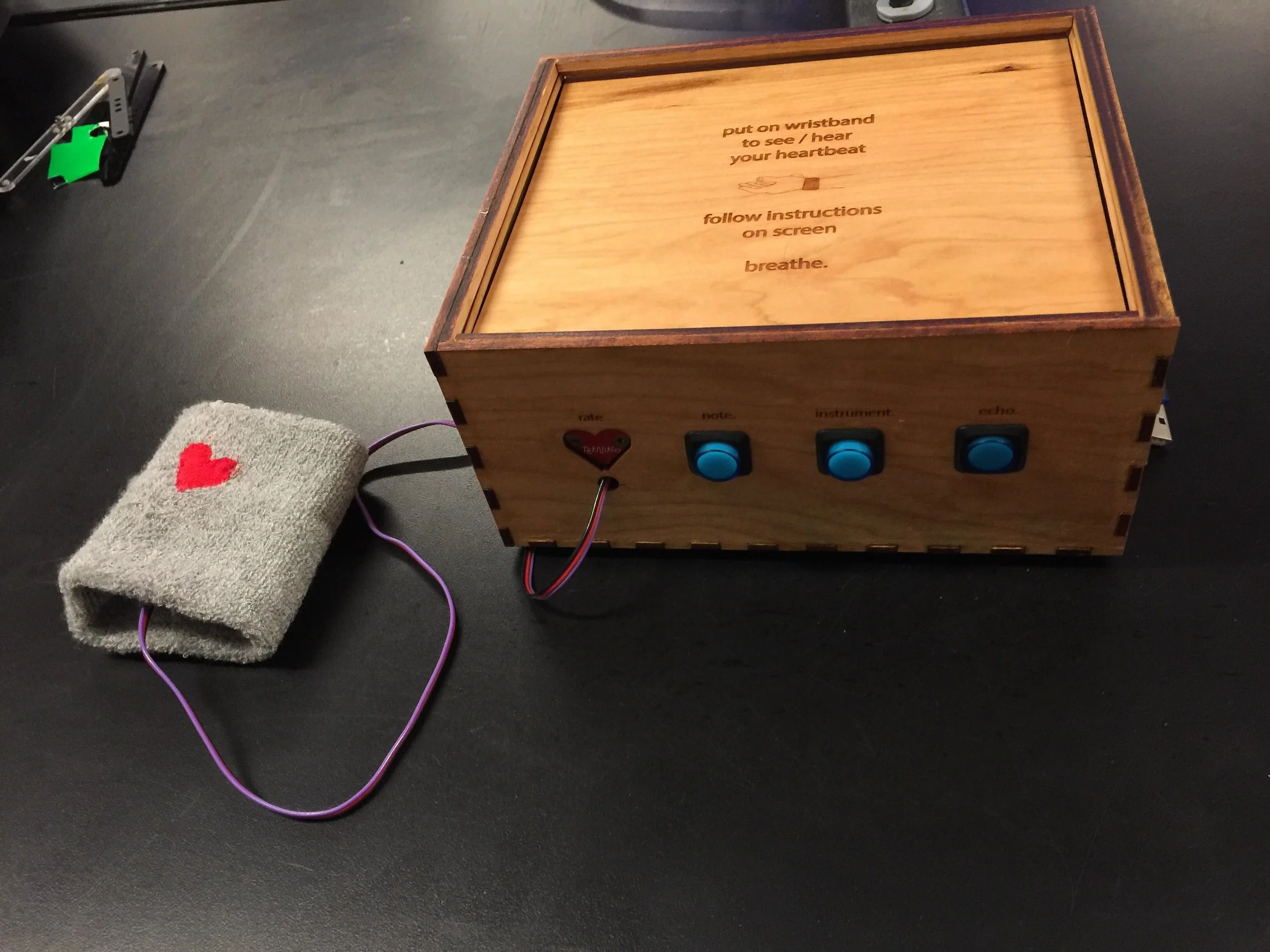

(Jim sewed a heart onto the new wristband!)

Great Heart enclosure version #2

We also recorded Jim's voice for the audio guide. We had planned to record a classmate, but something came up. Maybe we will record other versions in the future if needed, and ask her again! But I think Jim's voice turned out to be very calming, and it feels appropriate since he has been working on the project. We also decided to use a male voice because voices in technology are generally female, though I'm not sure that holds for instructions... In any case, we now have an audio guide, whose script I have also included here:

Welcome to Great Heart.

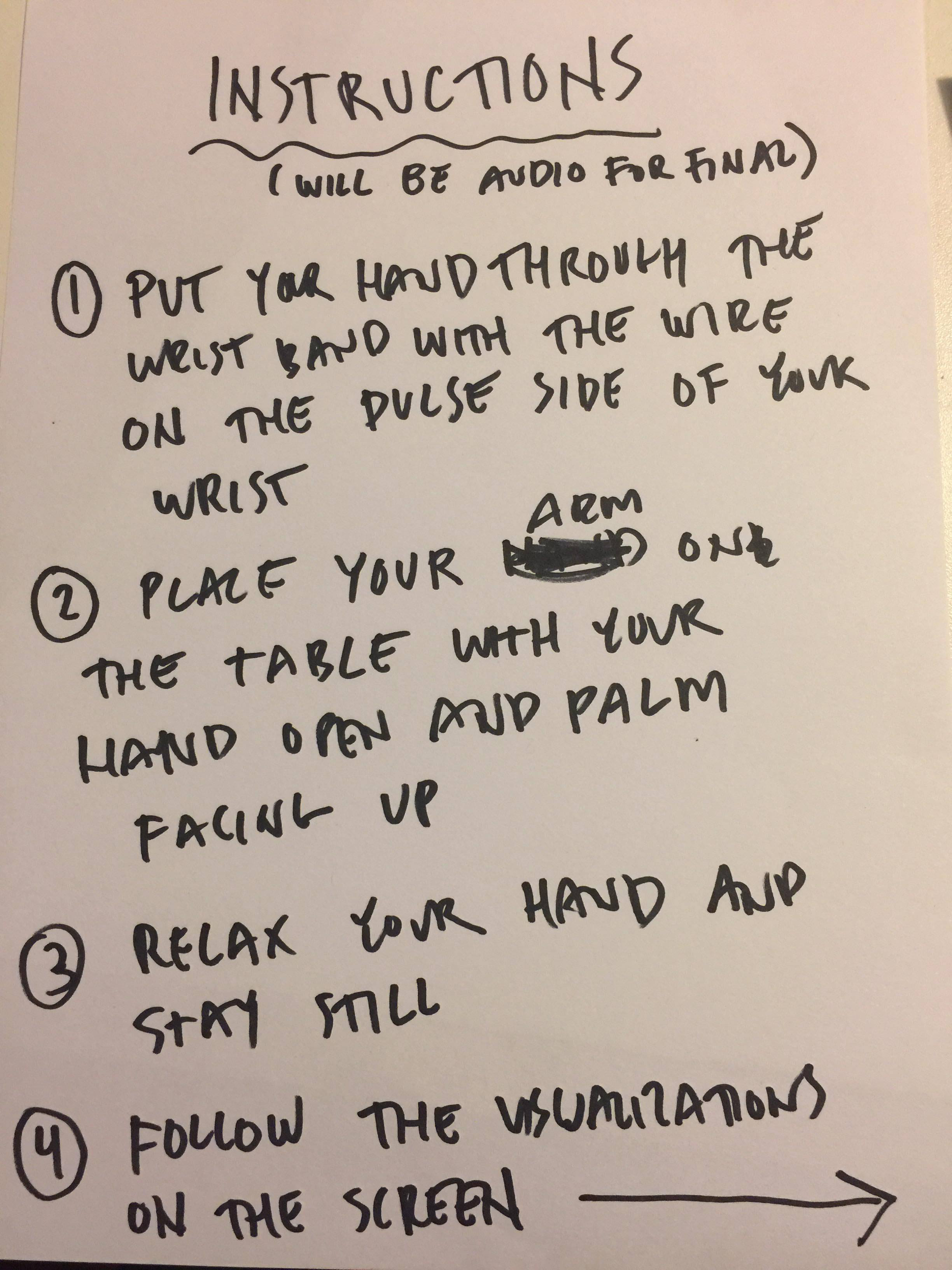

Using your left wrist, carefully put on the wristband to see and hear your heartbeat, with the heart placed over your pulse. This is where the sensor is reading your heart rate.

In a moment you will hear notes in sync with your heartbeat. Feel free to adjust the sound using the blue buttons on the enclosure.

Lay your left arm on the table, palm facing up, and hand relaxed.

Take in a deep breath through your nose, if you are comfortable.

Press either button to start.

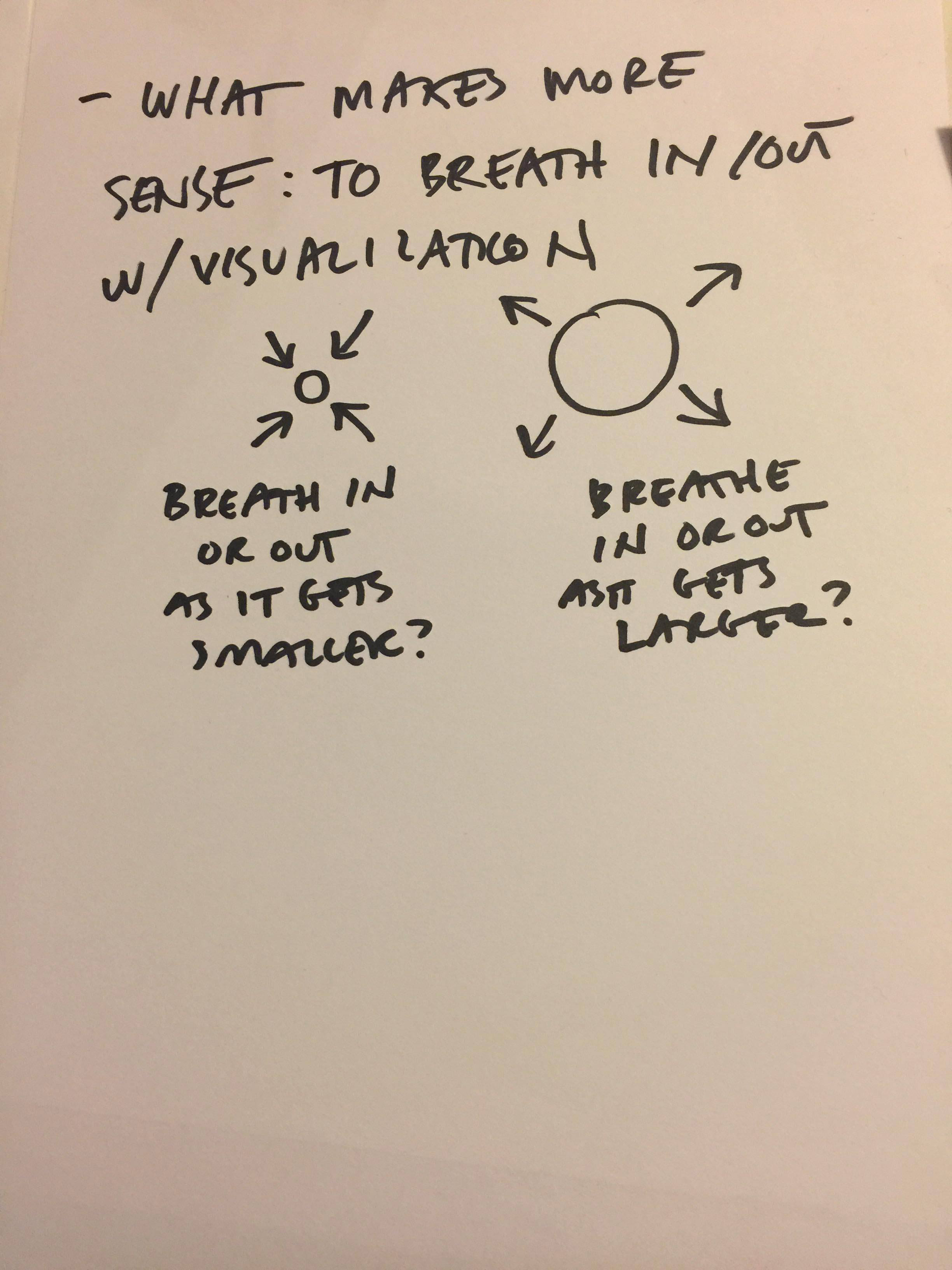

Choose a visualization on the screen, and breathe in and out deeply as the shapes get bigger and smaller.

Breathe in: ...2...3...4 (Breathing sound in through nose)

And out: ...2...3...4 (Breathing sound out through nose)

Tomorrow we plan to show Great Heart on the classroom projector and play it through the classroom speakers. It should be fun to see and hear it big (as long as everything goes as planned)! We also found out that the project will be in the Winter Show, which starts this weekend, where it will be displayed with headphones and a monitor. It has been very educational and inspiring to work on this so far. Further documentation to follow...

The code for our project can be found on GitHub here.

Day 96 @ ITP: Phys Comp

Yesterday I came to ITP and laser cut two more enclosures at a larger size (and failed to take photos of the process, but will take more of the finished project later). I made two because the wood was curved and I wasn't sure all the pieces would fit. It turned out my worry was justified. Ben Light was right: these boxes are tricky! However, it still seemed like the best option for making sure it would stay together.

In the afternoon Jim and I met up to make some new breathing visualizations. We added a "breathing rectangle" that grows up and down the screen, and a polygon that unfolds with your breath cycle. We also polished the GUI (the "computer program" pages) he had started in P5. He also showed me how to "beautify" my code, which tidies up the formatting and also helps you find mistakes, such as a forgotten bracket or a section that is broken.
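A breathing cycle like the one in the audio guide (in for a count of four, out for a count of four) could drive the size of a shape along these lines. This sketch assumes one count equals one second and uses cosine easing so the shape slows down at the top and bottom of each breath; `breathPhase` and `rectHeight` are hypothetical names, not functions from the project.

```javascript
// Assumption: one count = 1 second, so 4 s in and 4 s out (an 8 s cycle).
const INHALE_MS = 4000;
const EXHALE_MS = 4000;

// Returns 0 (fully exhaled, smallest) to 1 (fully inhaled, largest).
function breathPhase(timeMs) {
  const cycle = INHALE_MS + EXHALE_MS;
  const t = ((timeMs % cycle) + cycle) % cycle; // wrap into one cycle
  if (t < INHALE_MS) {
    // Inhale: ease from 0 up to 1
    return (1 - Math.cos((Math.PI * t) / INHALE_MS)) / 2;
  }
  // Exhale: ease from 1 back down to 0
  return (1 + Math.cos((Math.PI * (t - INHALE_MS)) / EXHALE_MS)) / 2;
}

// Height of a "breathing rectangle": never smaller than minFrac of the canvas
function rectHeight(timeMs, canvasHeight, minFrac = 0.1) {
  const p = breathPhase(timeMs);
  return canvasHeight * (minFrac + (1 - minFrac) * p);
}
```

In P5 this might be drawn with something like `rect(x, height - h, w, h)`, where `h = rectHeight(millis(), height)`, so the rectangle grows up from the bottom of the canvas.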

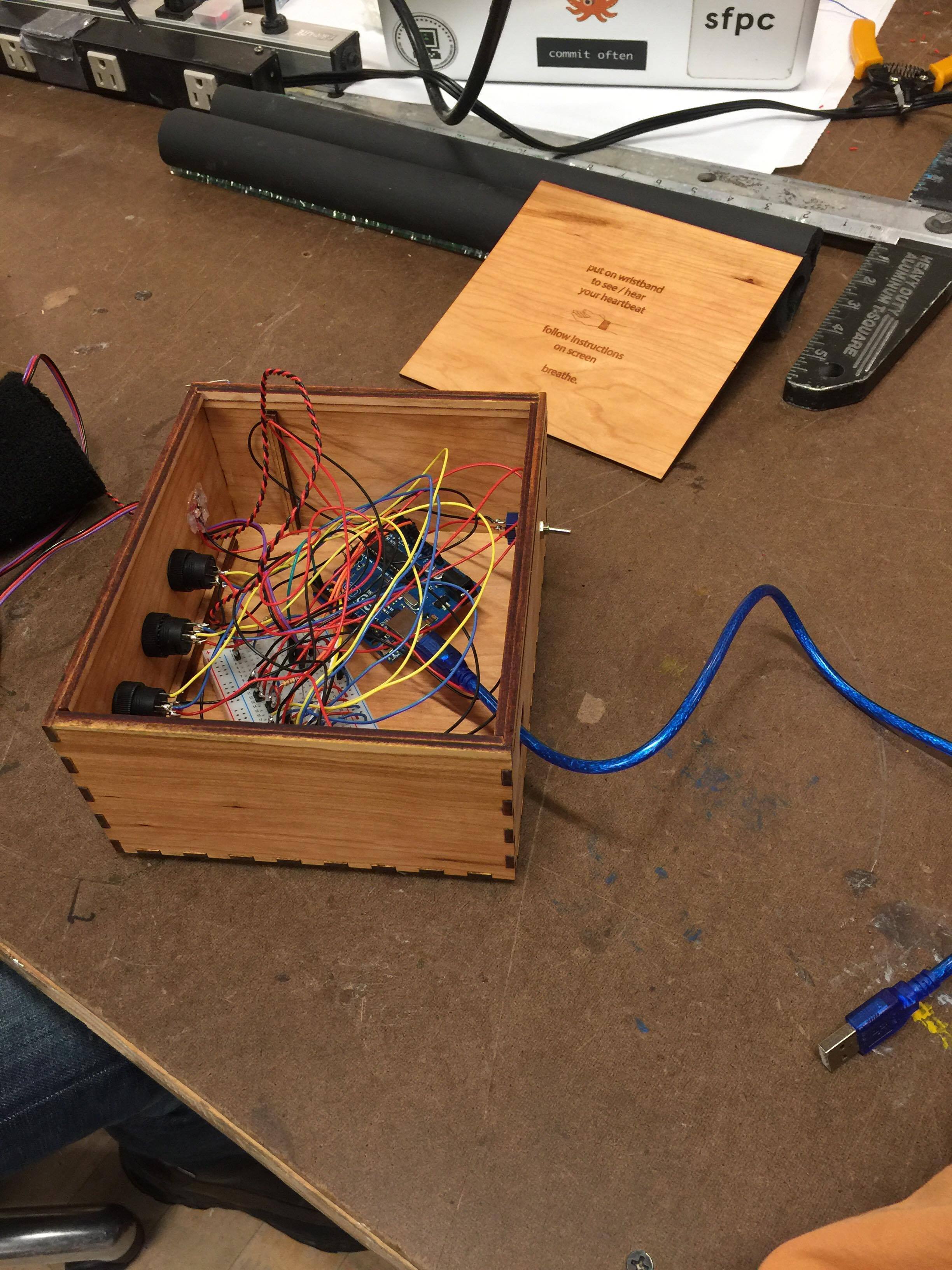

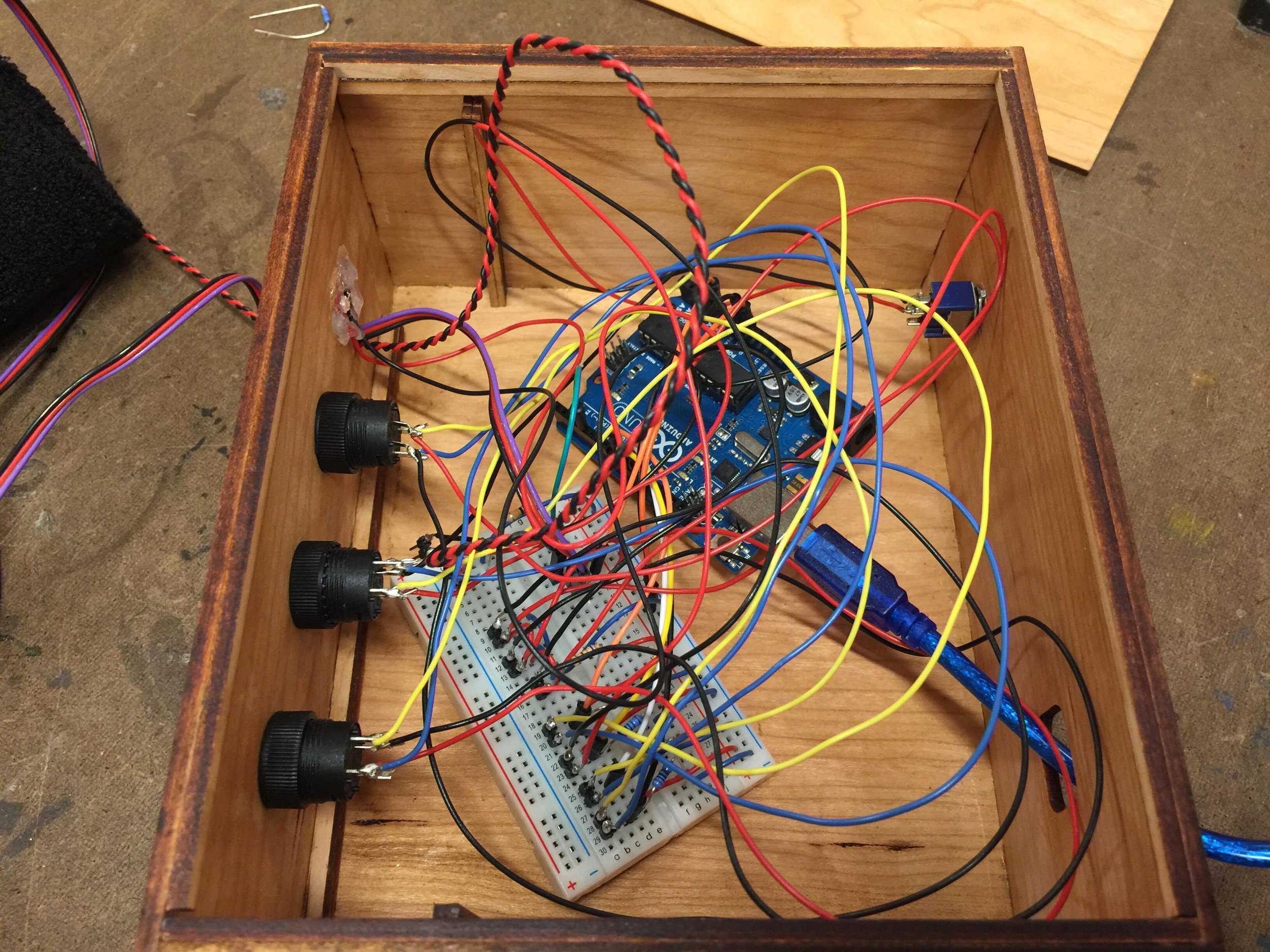

Today we met again and wired up the new enclosure. Everything fit. However, I think the enclosure could still be improved, perhaps by using flatter wood and taking more time with gluing. Gluing it last night was a bit of an ordeal, partly because my plan was not thought out and I assumed it would be simpler than it turned out to be. I got glue marks all over it, which I think I could avoid in the future by wiping off excess glue with a dry cloth.

It was helpful to wire up the new enclosure because it reminded us how we had organized the wiring on the Arduino and breadboard, and it made clearer how we would repeat it in the future if needed, and what we would change.

We also tried adding a heart LED to the wristband that would blink with the user's heartbeat, but for some reason it is not working yet; maybe the LED is broken? We will troubleshoot later.

Next up we will:

- Meet with Tom tomorrow afternoon for feedback before we continue.

- Troubleshoot the heart LED on the wristband

- Update the heartbeat detection algorithm with Jim's reworked code, which reads the heart rate and intelligently guesses where missing heartbeats would go, to make the sound more consistent.

- Possibly add an option to view the heart rate.

- Have the dropdown menu show up when viewing the visualizations.

- Finish coding the "challenge" vs "duration" options and how those will work.

- Record audio guide
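One way to "intelligently guess where missing heartbeats would go" is to treat any gap much longer than the typical beat-to-beat interval as one or more missed beats and fill it in evenly. The sketch below is a hypothetical illustration of that idea, not Jim's reworked code; it assumes ascending beat timestamps in milliseconds and uses the median interval so a single missed beat doesn't skew the estimate.

```javascript
// Hypothetical sketch, not the project's algorithm: estimate the typical
// beat interval, then fill suspiciously long gaps with evenly spaced beats.
function median(values) {
  const s = [...values].sort((a, b) => a - b);
  const mid = Math.floor(s.length / 2);
  return s.length % 2 ? s[mid] : (s[mid - 1] + s[mid]) / 2;
}

// Differences between consecutive beat timestamps (ms)
function intervalsOf(beatTimes) {
  return beatTimes.slice(1).map((t, i) => t - beatTimes[i]);
}

// BPM from the median interval, so one missed beat doesn't halve the reading
function estimateBpm(beatTimes) {
  return 60000 / median(intervalsOf(beatTimes));
}

// Gaps longer than tolerance x the typical interval are assumed to hide
// missed beats, which are inserted at evenly spaced times.
function fillMissedBeats(beatTimes, tolerance = 1.5) {
  if (beatTimes.length < 3) return [...beatTimes]; // too few beats to judge
  const typical = median(intervalsOf(beatTimes));
  const filled = [beatTimes[0]];
  for (let i = 1; i < beatTimes.length; i++) {
    const prev = beatTimes[i - 1];
    const gap = beatTimes[i] - prev;
    if (gap > typical * tolerance) {
      const n = Math.round(gap / typical); // beats that should fit in the gap
      for (let k = 1; k < n; k++) filled.push(prev + (gap * k) / n);
    }
    filled.push(beatTimes[i]);
  }
  return filled;
}
```

The tolerance of 1.5 is an arbitrary choice here: lower values fill in beats more eagerly, higher values only fill obvious dropouts.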

Day 96 @ ITP: ICM

I decided that my "musical sandboxes" project is something I may keep working on throughout my time at ITP, on my own or in classes, refining different ways of making it, whether purely in code or as a combination of software and physical objects. But this semester, since I am simultaneously working on a Physical Computing project that uses P5, it made the most sense to focus on that, combine both finals, and get the most out of a project I am also very interested in.

Recently we added more breathing visualizations in P5, which I will screen-capture and share here before this Wednesday. We also created a kind of "computer program" in P5, which I did not show at the final ICM presentation because it was not finished yet. It has an intro screen that leads you to the different visualization options, and a final screen that thanks you for participating before you click back to the beginning. Using the basic infrastructure of this program and what I've learned this semester in ICM, I think I could combine the code I worked on with Jim with other ideas, and I will definitely refer back to it for future projects (and possibly for different iterations of our project).

Right now it is very helpful to have a modular system that is easily changeable, and to manage it in separate .js sketches so that we don't break our code. I'm learning about code organization and good coding habits, and the trick after this will be to combine that with everything I learned is possible with code in ICM, keep making different kinds of projects, and keep it up. It really does feel like learning a language, and as with any language there is a hump you have to get over before it starts to feel like second nature.
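The screen flow described for the P5 "computer program" (intro screen, visualization choice, the visualization itself, a thank-you screen, then back to the beginning) can be modeled as a small state machine. This is a minimal sketch with hypothetical screen names, not the structure of the actual Great Heart program.

```javascript
// Hypothetical sketch: the program's screens as a tiny state machine.
// In P5, draw() would check flow.screen and render the matching page,
// and mousePressed() or a button press would call flow.next().
class ScreenFlow {
  constructor() {
    this.screen = "intro";
    this.visualization = null; // which breathing visualization was picked
  }

  next(choice) {
    switch (this.screen) {
      case "intro":
        this.screen = "choose";
        break;
      case "choose":
        if (!choice) break; // stay here until a visualization is picked
        this.visualization = choice;
        this.screen = "visualize";
        break;
      case "visualize":
        this.screen = "thanks";
        break;
      case "thanks":
        this.visualization = null;
        this.screen = "intro"; // loop back to the beginning
        break;
    }
    return this.screen;
  }
}
```

Keeping the flow in one small class like this fits the modular approach above: each screen's drawing code can live in its own .js file without knowing how the others are reached.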

Day 91 @ ITP: Phys Comp + ICM

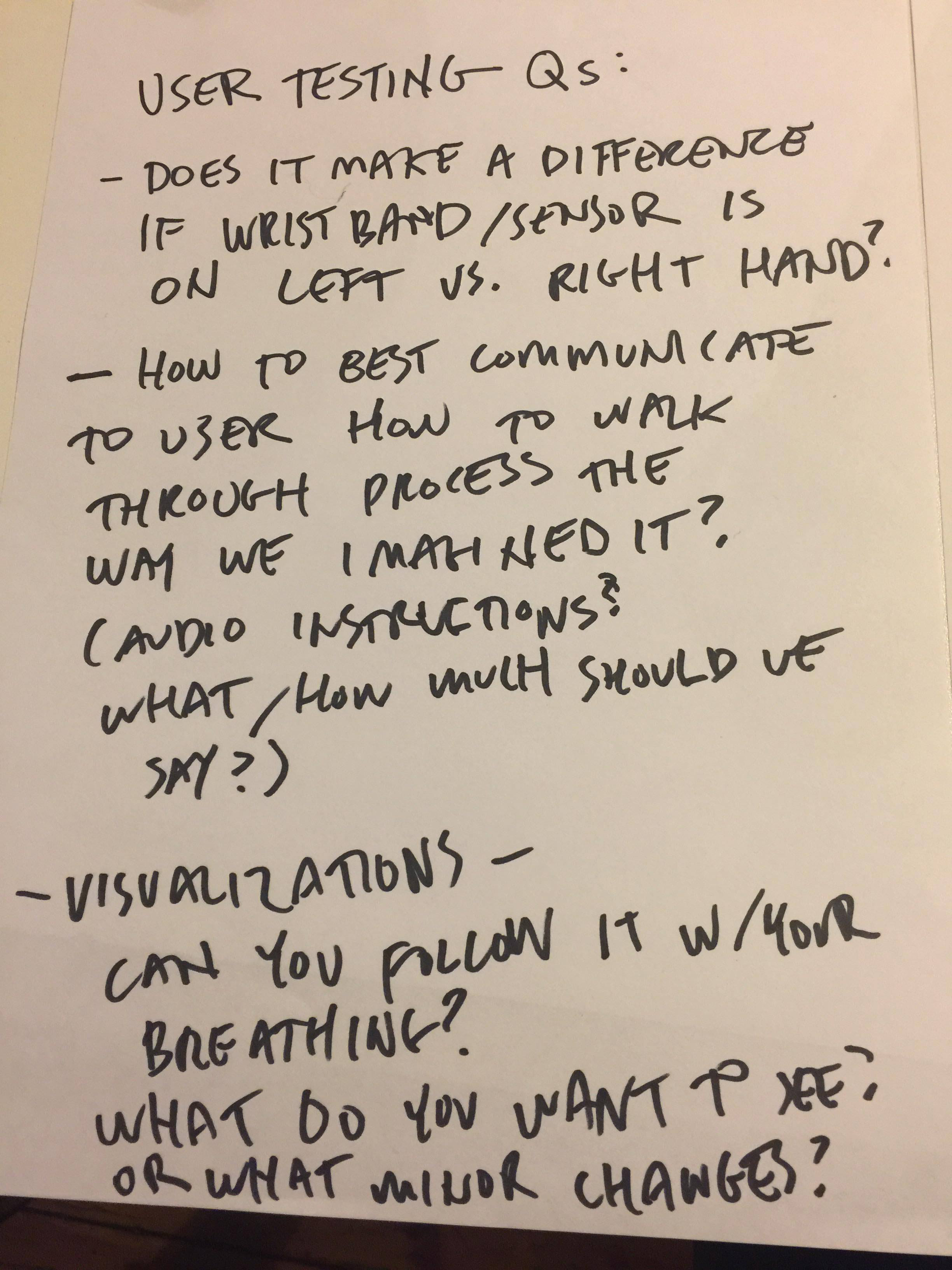

Great Heart — Questions for User Testing

Screenshot of breathing visualization #3 with the blue ring (between heartbeats)

Breathing visualization #3:

blue ring + pink orb which triggers w/ heart beat

Today Jim and I got together and coded the visualization with some new images, and made a video of our project to apply to the 2017 Winter Show. For now there are three visualizations. I will also show this project as my final presentation in ICM to share the P5 part, and I'm sure I will get valuable feedback there on the interaction. So far I have sat next to Jim and typed the code while he explained what to do, which was extremely helpful for me, and I hope also helpful for him in thinking through how it needed to be coded? Not sure. He was very patient, though. It definitely helped my muscle memory for coding: remembering to indent lines, add semicolons properly, nest parentheses correctly, and so on. I also learned about using classes to organize code into sections and objects, and in general about simplifying code to the fewest lines possible, and the syntax for that. Jim's code is very neat and simple. I can't say I would be able to recreate it, or the math sometimes involved, but it is starting to make sense to me, and it was nice of him to let me type. He explained how everything worked very well. At times I was able to fill in new parts on my own, and other times I was pretty sloppy and left out brackets and other things I would not have caught for a while. So it was definitely very helpful to work with Jim on this! But I think we also worked well together, had equal parts in conceptualizing it, and both worked on fabricating it. Overall I am very proud of it and interested to see what kinds of reactions or suggestions people have!

Day 78 @ ITP: ICM

Three beginnings for the musical sandbox idea:

http://alpha.editor.p5js.org/full/rJLIkC7gM (<-- this one is not working for some reason, though it was working in the editor...)

http://alpha.editor.p5js.org/full/rJ08A9XlG

http://alpha.editor.p5js.org/full/r1liJj7gf

Currently they all have the same sounds and images but they will vary...

The next step is to make the shapes draggable and, for one of them, pan the sound along x-y, or otherwise have it change the overall sound depending on where it is on the canvas. I will also meet with Aarón again next week to get more help with the code. It was nice today that I was able to complete a bit more of the coding on my own, including creating HTML objects, after seeing how something is done or watching him code it.

I'm also not sure about keeping these shapes; they were just for testing, but for some reason they are growing on me. I was thinking of using a color from each photograph as the color of the shapes and keeping it simple.

I also like the idea of doing many different iterations of the same kind of combination of things.
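The x-y panning idea from the next step above could start with a simple mapping from a dragged shape's position to stereo pan and volume. This is a minimal sketch that assumes the left and right edges of the canvas mean full left and right pan and that the top of the canvas is loudest; `positionToSound` is a hypothetical name.

```javascript
// Minimal sketch: map a dragged shape's canvas position to pan and volume.
function clamp(v, lo, hi) {
  return Math.min(hi, Math.max(lo, v));
}

// Assumption: x controls pan (-1 = full left, 1 = full right),
// y controls volume (1 at the top of the canvas, 0 at the bottom).
function positionToSound(x, y, width, height) {
  const pan = clamp((x / width) * 2 - 1, -1, 1);
  const volume = clamp(1 - y / height, 0, 1);
  return { pan, volume };
}
```

With p5.sound, the result could then be applied to a loaded sound using its `pan()` and `setVolume()` methods while the shape is being dragged. Clamping keeps the values valid even if a shape is dragged slightly off the canvas.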

Day 69 @ ITP: ICM

Update on final project:

I would like to make three audio-visual sandboxes using these images as canvases. They are photos of 35mm prints from Chile that I found at my parents' house, taken when we lived there circa 1995 or 1996; the last one is of our backyard in Vitacura. I really like the colors and would like to see how they look slit-scanned in the backgrounds of these sandboxes. I also feel that, for what I am imagining, one repeating image per sandbox will be enough, although I might change exactly how it scans each time even if the source images do not change.
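A slit-scan look can be approximated on a still image by moving the "slit" across the source unevenly and copying whichever column it lands on into each output column, so uneven motion stretches or squeezes the picture. This is a hypothetical sketch on a plain 2D array of pixel values (an array of rows) rather than a P5 image, and `wobblySlit` is just one made-up example of slit motion; changing its `phase` on each refresh would vary the scan while the source image stays the same.

```javascript
// Hypothetical sketch: emulate a slit scan over a still image.
// image: array of rows, each row an array of pixel values.
// Output column x is copied from source column slitAt(x).
function slitScanStill(image, slitAt) {
  const height = image.length;
  const width = image[0].length;
  const out = image.map(() => new Array(width));
  for (let x = 0; x < width; x++) {
    // Wrap the slit position so it always lands inside the source image
    const src = ((Math.floor(slitAt(x)) % width) + width) % width;
    for (let y = 0; y < height; y++) {
      out[y][x] = image[y][src];
    }
  }
  return out;
}

// Example slit motion: a slow sinusoidal drift around the image's center
function wobblySlit(width, phase) {
  return (x) => width / 2 + (width / 4) * Math.sin((x / width) * 2 * Math.PI + phase);
}
```

In P5, the same column-by-column copy could be done with `copy()` using a source width of one pixel.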

Day 64 @ ITP: ICM

Final project proposal:

For my final ICM project I plan to make 3-5 audio-visual collages that can be manipulated by the viewer. I would like the viewer to be able to change them visually as well as sonically.

I am trying to keep my expectations within reason so that I can code these mostly myself, with some help. My goal is to master the basic elements of code involved here, so that I could make more of them and potentially build on this idea later.

I'm imagining a canvas for each, which may be a video run through the slit-scan effect from the last assignment (if it's possible to put images on top of a background video?), plus some elements in the scene, probably abstract ones, that can be clicked on and dragged around to sort of "collage" or arrange them. Meanwhile, a background song or audio layer would be playing, and when the viewer clicks on an element, a new audio layer would be introduced or taken away, or a one-time audio sample would be triggered. I would also like one element in each sketch to add a subtle audio effect to the sound when it is dragged along the x-y axes. This interaction may be pretty simple, but hopefully it will be a fun tool for interacting with a piece of music.
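The click-versus-drag interaction described above could be sketched like this: find the topmost element under the mouse, and on release either toggle its audio layer (if the mouse barely moved) or move it (if it was dragged). The class, the circular hit area, and the 3-pixel drag threshold are all assumptions for illustration; the `layerOn` flag stands in for starting or stopping a loop, for example with p5.sound's `loop()` and `stop()`.

```javascript
// Hypothetical sketch: circular sandbox elements that toggle an audio layer
// on click and move when dragged. Actual sound playback is left out.
class SandboxElement {
  constructor(x, y, r, name) {
    Object.assign(this, { x, y, r, name });
    this.layerOn = false; // whether this element's audio layer is playing
  }

  contains(px, py) {
    return (px - this.x) ** 2 + (py - this.y) ** 2 <= this.r ** 2;
  }
}

// The last element drawn sits on top, so search from the end of the array
function pickTopmost(elements, px, py) {
  for (let i = elements.length - 1; i >= 0; i--) {
    if (elements[i].contains(px, py)) return elements[i];
  }
  return null;
}

// Barely moving between press and release counts as a click (toggle layer);
// moving further counts as a drag (move the element by the same amount).
function handleRelease(el, pressX, pressY, releaseX, releaseY, dragThreshold = 3) {
  const moved = Math.hypot(releaseX - pressX, releaseY - pressY);
  if (moved <= dragThreshold) {
    el.layerOn = !el.layerOn;
  } else {
    el.x += releaseX - pressX;
    el.y += releaseY - pressY;
  }
}
```

In P5, `mousePressed()` could call `pickTopmost` with `mouseX` and `mouseY` and remember the press position, and `mouseReleased()` could call `handleRelease`.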

I got some feedback in class to check out Björk's performances with the Reactable; here it is, to watch later!

Note:

For my Phys Comp final, I am working with my class partner on a meditation tool: a sort of computer program, which we plan to make in P5, that visualizes breathing exercises, along with a sonification of the user's heartbeat triggered by a pulse sensor connected to an Arduino. We will also create a sound library of samples in P5 that will be triggered by the heartbeat data coming from the Arduino. So I will be working in P5 for that as well, though some of the math in the code is beyond my capabilities. However, I think I will learn a lot, and it will be fun to brainstorm the kinds of interactions and interfaces we can code using P5 as an engine.

Update on 11/13:

I wanted to add my plan for completing this project. I went back and forth on whether I should just focus on the P Comp project, and my role helping with the P5 side of it, instead of doing a separate final for ICM. But I think I could create at least three of these musical sandboxes for the final and would feel good about doing that. It would also draw more directly on the code we learned in this class. Here is how I plan to do it, scaled back because I will not have much time:

For Nov 15:

Gather images and sounds for 3 different compositions. Keep them simple: some loops and some individual sounds (maybe these can go in an array so I can switch which one gets triggered?). Choose whether there will be video in the background or just one slit-scanned image that repeats, and maybe changes how it repeats on refresh (or not)...
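Keeping one-shot sounds in an array, as the plan above suggests, makes it easy to switch which one gets triggered, either in order or at random. In this minimal sketch, plain strings stand in for loaded sounds, and `OneShotBank` is a hypothetical name.

```javascript
// Minimal sketch: one-shot sounds kept in an array, triggered in turn or at
// random. Strings stand in for loaded sounds (e.g. results of loadSound()).
class OneShotBank {
  constructor(sounds) {
    this.sounds = sounds;
    this.index = 0;
  }

  // Cycle through the array in order, wrapping back to the start
  nextSound() {
    const s = this.sounds[this.index];
    this.index = (this.index + 1) % this.sounds.length;
    return s;
  }

  // Pick at random; rand can be swapped out for testing (defaults to Math.random)
  randomSound(rand = Math.random) {
    return this.sounds[Math.floor(rand() * this.sounds.length)];
  }
}
```

Cycling gives a predictable pattern, while random selection keeps repeated clicks from sounding identical; which one feels better is a matter of taste for each composition.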

For Nov 22:

No class.

Meet with Aarón for advice. Maybe focus on the interactions between the sounds based on where they all are on the canvas. Get an image slit-scanning in the background and place images on top to drag around and trigger sounds with (ambient loops, and one-hits in an array).

For Nov 29:

Come up with a pleasing interaction between the sounds. Play with different images and sounds. Figure out what is not working if anything and make a plan to debug it.

For Dec 6:

Do any debugging. Meet with a resident for help if there are any issues. Tweak the visuals and sounds as needed (like seasoning with salt and pepper at this point). Think about the interaction: is this a way to showcase music or to make music? Do a blog post.

For Dec 13:

Prepare the final presentation. Demonstrate how it works, and maybe have people in class play with it as part of the presentation, if they would like?