Day 51 @ ITP: Intro to Fab

Audio Mixing screen shot for a Radio Lab episode

Day 50 @ ITP: Robert Krulwich (Radio Lab)

Day 49 @ ITP: Phys Comp

Week 8

Midterm project w/ Jim Schmitz

We ended up using the software recommended in the book "Music and Audio Projects for Arduino" on Jim's laptop to change the voices, after realizing that sending MIDI commands to a software synthesizer like Omnisphere is trickier to work with. However, we got the basic MIDI commands to work! And with the basic software, everything works exactly as it should. Jim explained his method to me as we went along so that I could understand it as fully as possible and also explain it during our demonstration in class.

Basically, using a graphing method that can also be applied to changing stock prices or other financial information, Jim calculated a "moving average" in order to adjust to people's varying heartbeat graphs. The moving average takes in the newest information as a weighted average, giving more importance to new data so that it affects the average more heavily. It tracks heart rate data for a few seconds before old data drops out, giving the newest data the most weight (0.9, for example) and the oldest heart rate data the least (0.1). This allows the Arduino to detect that a heartbeat is about to spike when the reading moves a certain amount above its average, and then send a MIDI signal to the software synthesizer to play a note each time a spike is detected. The code also tells the note to stop playing a certain amount of time after it starts. When the moving average starts to go downwards, the code sends a MIDI command telling the software synthesizer to reset and wait for a new heartbeat.

The code also uses MIDI commands to change the key within a certain range (note numbers 50-62), to change the voice (from a set of voices we chose out of the bank provided by the software synthesizer that works well with Hairless MIDI Serial), and to add sustain (a basic MIDI command). The way MIDI works is that the first byte of a message sends the command, and the bytes after it supply the details. So, for example, if the first byte starts with a 1 bit, it says to do *something*, such as play a note. The next two bytes then say which note to play and at what volume. The Voice button's message, however, carries only one value after the command: the voice to change to (when the count exceeds the length of the "voice list", the code returns to the beginning of the list of sounds).
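To make the byte layout concrete, here is a minimal sketch in plain C++ (not our project code) that builds the same kinds of messages the Arduino writes to the serial port. The helper names `statusByte`, `noteOnMessage`, and `programChangeMessage` are made up for illustration; the command values 0x90 (note on) and 0xC0 (program change) are the standard MIDI ones.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Combine a command (upper four bits, e.g. 0x90) with a channel 0-15
// (lower four bits). The top bit of a status byte is always 1, which is
// how the receiver tells a command apart from the data bytes after it.
std::uint8_t statusByte(std::uint8_t command, std::uint8_t channel) {
    assert(channel < 16);
    return static_cast<std::uint8_t>((command & 0xF0) | channel);
}

// Note on: one status byte, then two data bytes (which note, how loud).
// Data bytes only use the lower seven bits, so their top bit is always 0.
std::vector<std::uint8_t> noteOnMessage(std::uint8_t channel,
                                        std::uint8_t note,
                                        std::uint8_t velocity) {
    return {statusByte(0x90, channel),
            static_cast<std::uint8_t>(note & 0x7F),
            static_cast<std::uint8_t>(velocity & 0x7F)};
}

// Program change ("voice"): one status byte, then a single data byte.
std::vector<std::uint8_t> programChangeMessage(std::uint8_t channel,
                                               std::uint8_t program) {
    return {statusByte(0xC0, channel),
            static_cast<std::uint8_t>(program & 0x7F)};
}
```

For example, `noteOnMessage(0, 50, 127)` produces the three bytes 0x90, 50, 127, while `programChangeMessage(0, 2)` produces only two bytes, 0xC0 and 2.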

The math for the heartbeat detection study we did for this project is thoroughly documented on Jim's blog here.
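As a rough illustration of the moving-average idea (a sketch under my own assumptions, not the math from Jim's blog), the detection can be written as a small plain C++ helper. The name `BeatDetector` is hypothetical; the span of 300 readings, the starting value of 512, and the threshold of 75 in the usage note are borrowed from the Arduino sketch further down this page.

```cpp
#include <cassert>

// Hypothetical helper: keeps an exponential moving average of sensor
// readings and reports the moment a new beat starts.
struct BeatDetector {
    float average;    // running moving average of the readings
    float alpha;      // weight given to each new reading
    float threshold;  // how far above the average counts as a spike
    bool inBeat;      // true while the current spike is still going

    BeatDetector(int span, float startValue, float spikeThreshold)
        : average(startValue),
          alpha(2.0f / (span + 1)),
          threshold(spikeThreshold),
          inBeat(false) {
        assert(span > 0);
    }

    // Feed one reading. Returns true only on the reading where a new
    // beat is first detected, so one spike triggers one note.
    bool update(int reading) {
        // Blend the new reading into the average.
        average = alpha * reading + (1.0f - alpha) * average;
        if (reading > average + threshold) {
            if (!inBeat) {
                inBeat = true;
                return true;
            }
        } else if (reading < average) {
            // Reading fell below the average: this beat has ended.
            inBeat = false;
        }
        return false;
    }
};
```

With `BeatDetector d(300, 512.0f, 75.0f);`, a jump from 512 to 700 makes `d.update(700)` return true once. Later readings in the same spike return false until the signal has dipped below the average again.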

Here are some audio samples of both of us experimenting with meditating using the MIDI Meditation device (using two different sounds in Omnisphere):

And some videos of us interacting with our prototype...

DATA (USER TESTING)

1) Below are the graphs from our first user testing (without the moving average applied):

2) User testing graphs with the moving average applied (to show how the code will detect the varying heartbeats using the moving average):

I think what really brought this project together was that we came up with an interaction that we were actually excited to work with and use ourselves. It also helped (a lot!) that Jim had a background in working with moving averages, which made the project work more like we imagined and essentially allowed the interaction to come to life. Our original idea was to have the heart rate set the tempo of a piece of music, or trigger notes within a scale to create music. During brainstorming, that changed to triggering a single sound in time with a person's changing heart rate, which made the interaction more interesting to us and to others as well... I think our only hitch was working with the Omnisphere software, which made things more difficult and slowed us down somewhat, but in the end it worked just as we intended with the other software. I also learned some code, and about how MIDI works. I actually think I now understand how each MIDI command worked and how we programmed the buttons, and some things also clicked when wiring the breadboard and soldering for the first time.

We also realized that it was actually helpful, even calming, to have the heart rate sonified this way, as the interaction and sound in effect remind you to be more aware of your actual heart beating. This version is also more conducive to meditation or experiments with meditation.

Day 49 @ ITP: Video and Sound

Installations (from Syllabus) for reference:

JACQUES TATI’S PLAYTIME

ISAAC JULIEN / PLAYTIME

William Whyte, The Social Life of Small Urban Spaces

PIPILOTTI RIST / POUR YOUR BODY OUT

https://www.youtube.com/watch?v=V-9fxFAmxVk

https://www.youtube.com/watch?v=lL3NJdxfrAA

NATALIE BOOKCHIN / TESTAMENT

MARCUS COATES / DAWN CHORUS

https://www.youtube.com/watch?v=PCCpnDtgxXk

https://www.youtube.com/watch?v=RsFdO_kvfIM

MIWA MATREYEK / (TED TALK)

JAN SVANKMAJER / ALICE

BROTHERS QUAY / SEMICONDUCTOR FILMS / EARTHWORKS, WORLDS IN THE MAKING

ISABELLA ROSSELLINI / GREEN PORNO: EARTHWORM

RACHEL MAYERI, PRIMATE CINEMA

Day 48 @ ITP: Phys Comp

Week 8

Midterm project w/ Jim Schmitz:

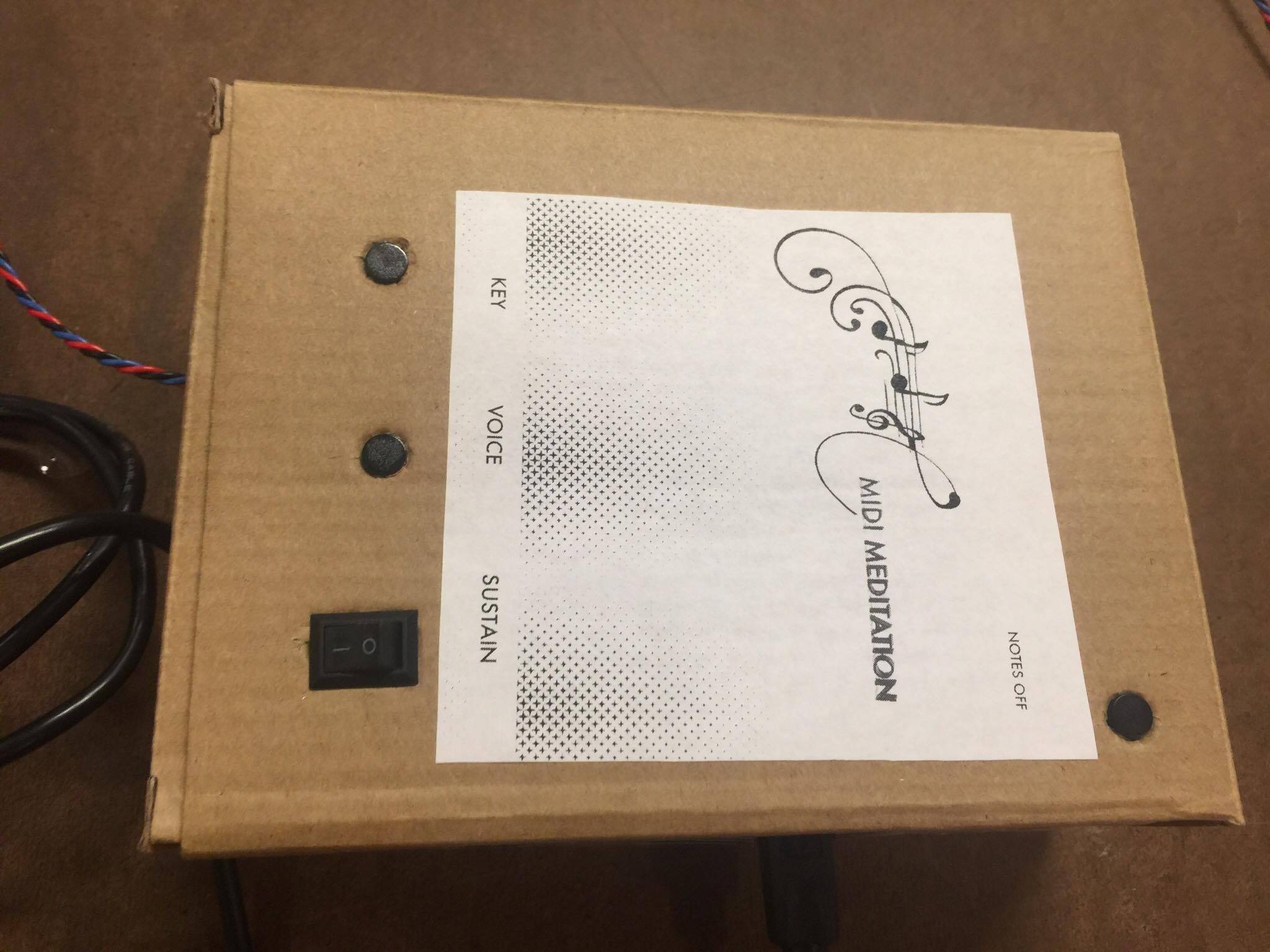

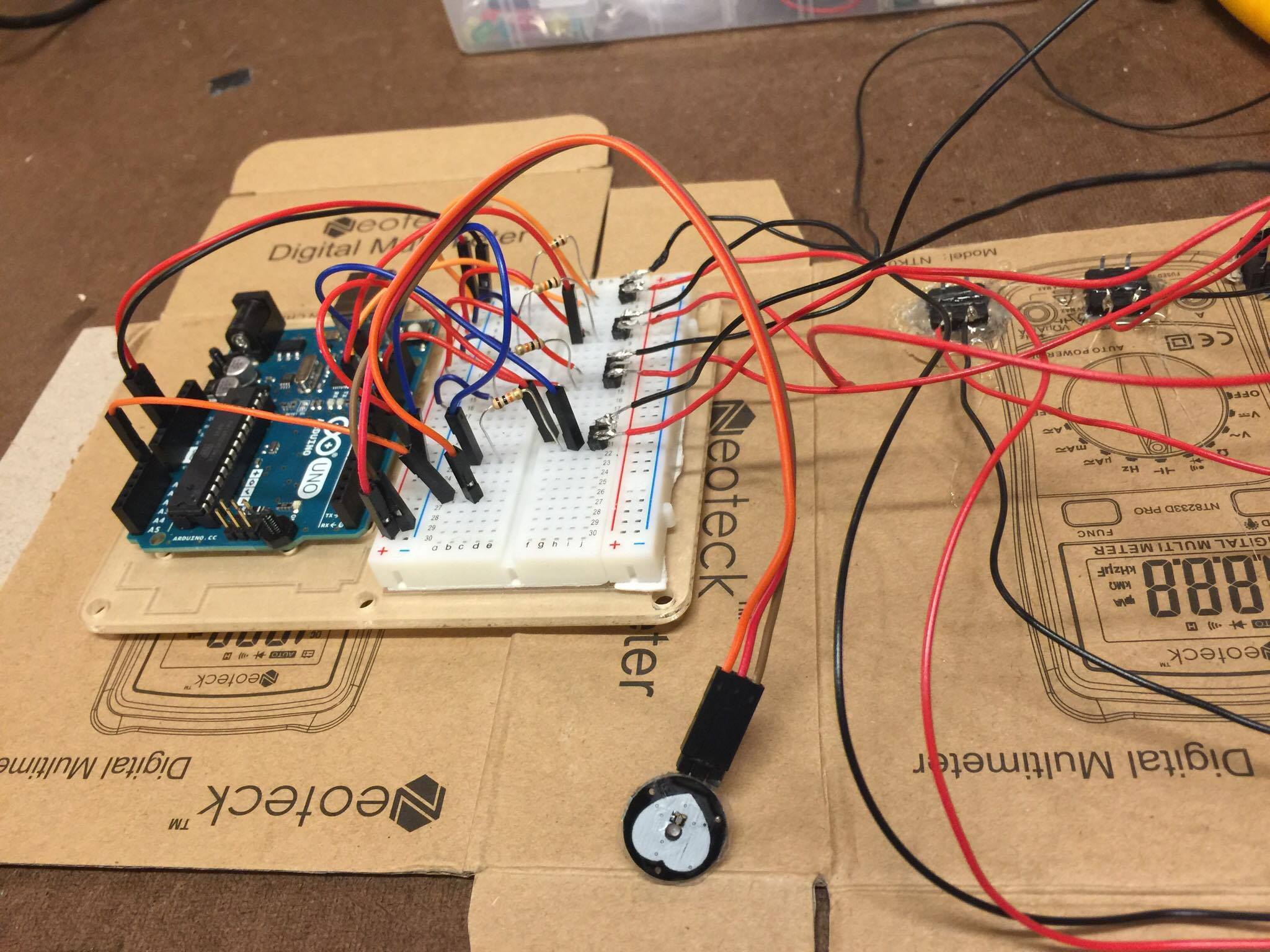

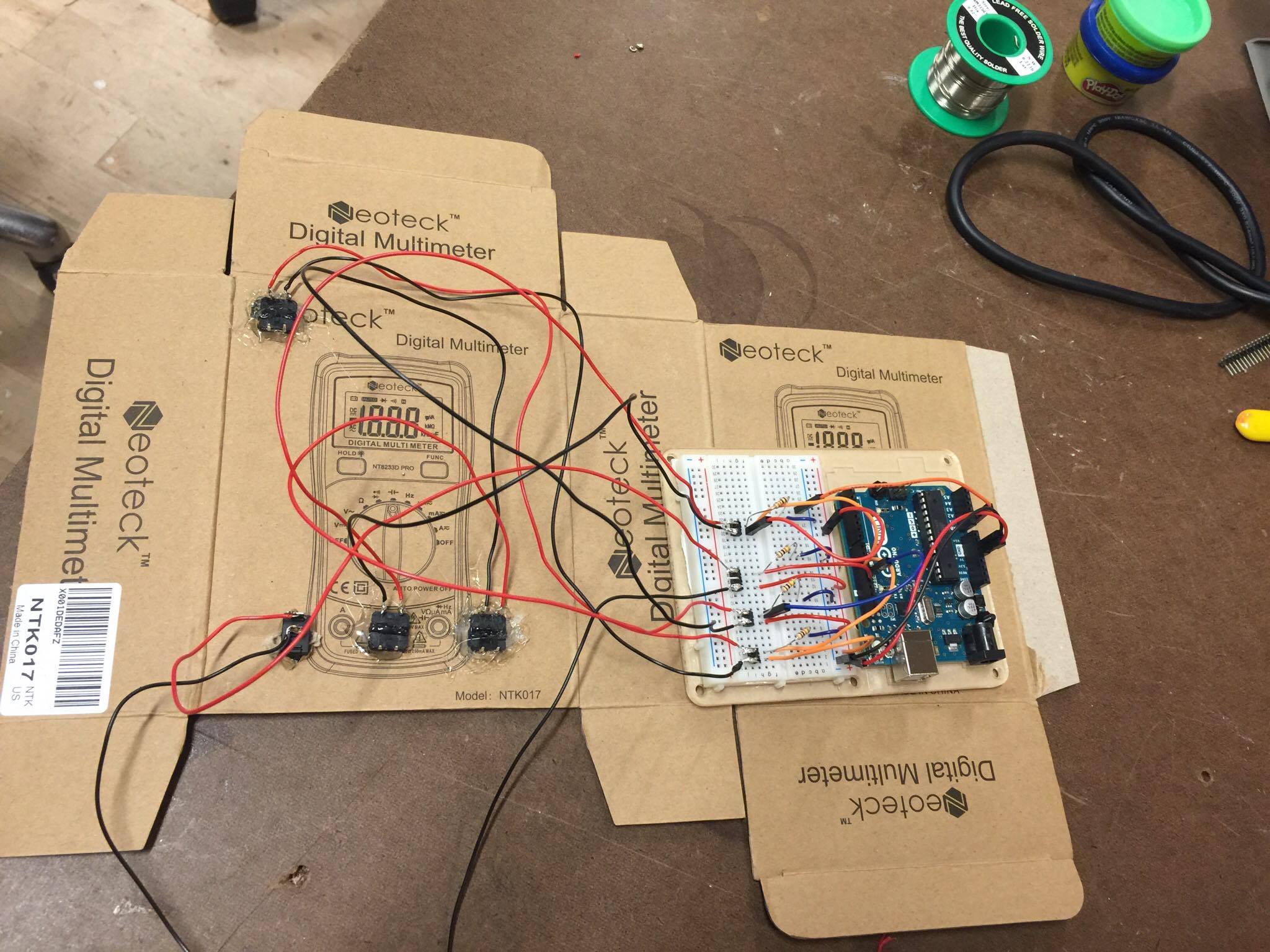

Yesterday we soldered the buttons to the cardboard box that we are using as an enclosure, and today we finished putting it together. We also designed a label prototype, printed it on the ITP printer and glued it on. We braided a longer cord for the pulse rate sensor, so it can now be held as far as around 4 feet away from the instrument. I was thinking it could then also potentially be used on different parts of the body or pressure points. The enclosure has 3 toggle buttons and one switch that each trigger a different MIDI command: change the key (button #1), change the instrument (button #2), add sustain (the switch), or turn off all sounds (button #3 at the top right).

Everything works through the "Hairless MIDI Serial" software, which was recommended in the book we are reading for this project, but it is not entirely working yet with Omnisphere on my laptop, which is where we want to play the sounds from... We are having issues particularly with the program change or "voice" button that changes the instrument, but I think if we redo the code in some way we can get it to toggle through different sounds... it just isn't working the way we currently have it coded. So tomorrow we will look at that before the presentations on Wednesday. We also plan to look into getting it working for at least both my and Jim's heart rates (my heart rate graph comes out low, his is higher) and will also experiment with meditating with it! And we will go over the code and how it works so that I can explain that part on Wednesday. I actually think that Jim did a really good job explaining it and hope I remember it correctly. At the beginning he explained a lot, and towards the end of programming the buttons I was adding them myself, adding the variables, sending the MIDI commands, and telling the code which pin to read for each button. I think before it was kind of seeping in, but it took working on something like this to get some things to stick.
And I soldered for the first time this weekend. I'm quite happy with the concept of our project and hope we can get it to work the way we intended. I also would like to record some audio and/or video from the MIDI Meditation device to share here...
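The button handling boils down to two small ideas: only react at the moment a button goes from released to pressed, and step through the voice list with wrap-around. Here is a minimal plain C++ sketch of both, written separately from our Arduino code. `PressDetector` and `VoiceCycler` are hypothetical names used just for this illustration.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: reports true only on the reading where the button
// changes from released to pressed, so holding it down sends one command.
struct PressDetector {
    bool wasPressed = false;

    bool update(bool isPressed) {
        bool justPressed = isPressed && !wasPressed;
        wasPressed = isPressed;
        return justPressed;
    }
};

// Hypothetical helper: steps through a list of voices and returns to the
// start after the last one.
struct VoiceCycler {
    const int* voices;
    std::size_t count;
    std::size_t index = 0;

    VoiceCycler(const int* list, std::size_t n) : voices(list), count(n) {
        assert(n > 0);
    }

    // Advance to the next voice, wrapping at the end of the list.
    int next() {
        index = (index + 1) % count;
        return voices[index];
    }
};
```

In use, each pass of the main loop would feed the pin reading to `update()`, and only when it returns true would the code call `next()` and send a program change with the returned voice number.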

Current code:

// define user constants

#define midiChannel (byte)0 // Channel 1

#define SPAN 300

#define VOLUME 127

// declare variables

float valEMA;

float alpha;

boolean beat;

boolean sustainOn;

boolean noteOn;

boolean voiceButtonOn;

boolean noteButtonOn;

boolean offButtonOn;

long noteOffTime;

const int sustainPin = 13;

const int voicePin = 9;

const int notePin = 12;

const int offPin = 10;

int voiceNumber;

int noteNumber;

int voiceList[] = {1, 2, 3};

void setup() {

Serial.begin(115200);

pinMode(sustainPin, INPUT); // configure sustain for reading later

pinMode(voicePin, INPUT); // configure voice for reading later

pinMode(notePin, INPUT); // configure note for reading later

pinMode(offPin, INPUT); // configure off button for reading later

// initialize variables

valEMA = 512;

alpha = 2.0 / (SPAN + 1);

beat = false;

noteOn = false;

noteOffTime = 0;

sustainOn = false;

voiceNumber = 0;

voiceButtonOn = false;

noteNumber = 50;

noteButtonOn = false;

offButtonOn = false;

}

void loop() {

// read heartbeat monitor

int val = analogRead(A0);

// update moving average

valEMA = alpha * val + (1 - alpha) * valEMA;

// is the new reading significantly above the moving average?

// if so, this spike means the heart is beating

if (val > valEMA + 75) {

// have we not already detected this beat?

if (!beat) {

// note on

commandSend(0x90, noteNumber, VOLUME);

// schedule time to turn off note

noteOffTime = millis() + 200;

// update state variables

noteOn = true;

beat = true;

}

// has the current reading dropped down below the moving average?

// if so, this beat has ended

} else if (val < valEMA) {

beat = false;

}

// if the note is playing and the clock time is after the scheduled time

// for turning off the note, turn it off

if (noteOn && millis() > noteOffTime) {

// note off

commandSend(0x80, noteNumber, VOLUME);

// update state variables

noteOn = false;

}

// TODO: read other buttons and compare to state. send MIDI messages where necessary

int sustainRead = digitalRead(sustainPin);

if (sustainOn == false && sustainRead == HIGH) {

commandSend(0xB0, 64, 127);

sustainOn = true;

} else if (sustainOn == true && sustainRead == LOW) {

commandSend(0xB0, 64, 0);

sustainOn = false;

}

int voicePress = digitalRead(voicePin);

if (voiceButtonOn == false && voicePress == HIGH) {

voiceNumber++;

if (voiceNumber >= sizeof(voiceList) / sizeof(voiceList[0])) { // number of entries, whatever the size of int

voiceNumber = 0;

}

commandSend(0xC0, voiceList[voiceNumber]);

voiceButtonOn = true;

} else if (voiceButtonOn == true && voicePress == LOW) {

voiceButtonOn = false;

}

int notePress = digitalRead(notePin);

if (noteButtonOn == false && notePress == HIGH) {

noteNumber++;

if (noteNumber > 62) {

noteNumber = 50;

}

noteButtonOn = true;

} else if (noteButtonOn == true && notePress == LOW) {

noteButtonOn = false;

}

int offButtonRead = digitalRead(offPin);

if (offButtonOn == false && offButtonRead == HIGH) {

commandSend(0xB0, 120, 0);

offButtonOn = true;

} else if (offButtonOn == true && offButtonRead == LOW) {

offButtonOn = false;

}

// pause 10 ms

delay(10);

}

/*

Send MIDI command with one data value

*/

void commandSend(char cmd, char data1) {

cmd = cmd | char(midiChannel);

Serial.write(cmd);

Serial.write(data1);

}

/*

Send MIDI command with two data values

*/

void commandSend(char cmd, char data1, char data2) {

cmd = cmd | char(midiChannel);

Serial.write(cmd);

Serial.write(data1);

Serial.write(data2);

}

Day 48 @ ITP: ICM

Week 8

Using APIs:

I tried a test sketch that uses the number of astronauts currently in space, where things move every time you open it. It is from a Shiffman tutorial... http://alpha.editor.p5js.org/ivymeadows/sketches/rJZbUM26b

I would like to try something with more constantly changing data, but this is what I got to work as a start. Tomorrow I would like to work on somehow using the data to make images appear, which can then get moved around randomly upon refreshing. Soon I would also like to learn how to make an image appear on the screen and disappear when you click it... or maybe be dragged around the screen and relocated in order to sort of collage or compose an image. It was interesting to read a bit about APIs, and I need to think about how I would want to use them...

This is a weird thing I made sort of just through thinking about APIs. I made it with an array, though, and would like to learn how to make images appear by pulling data through an API...

Day 44 @ ITP: Video and Sound

Week 7

Final video project:

The Craftsman and the Craft

By Alexandra Lopez . Camilla Padgitt-Coles . Michael Fuller