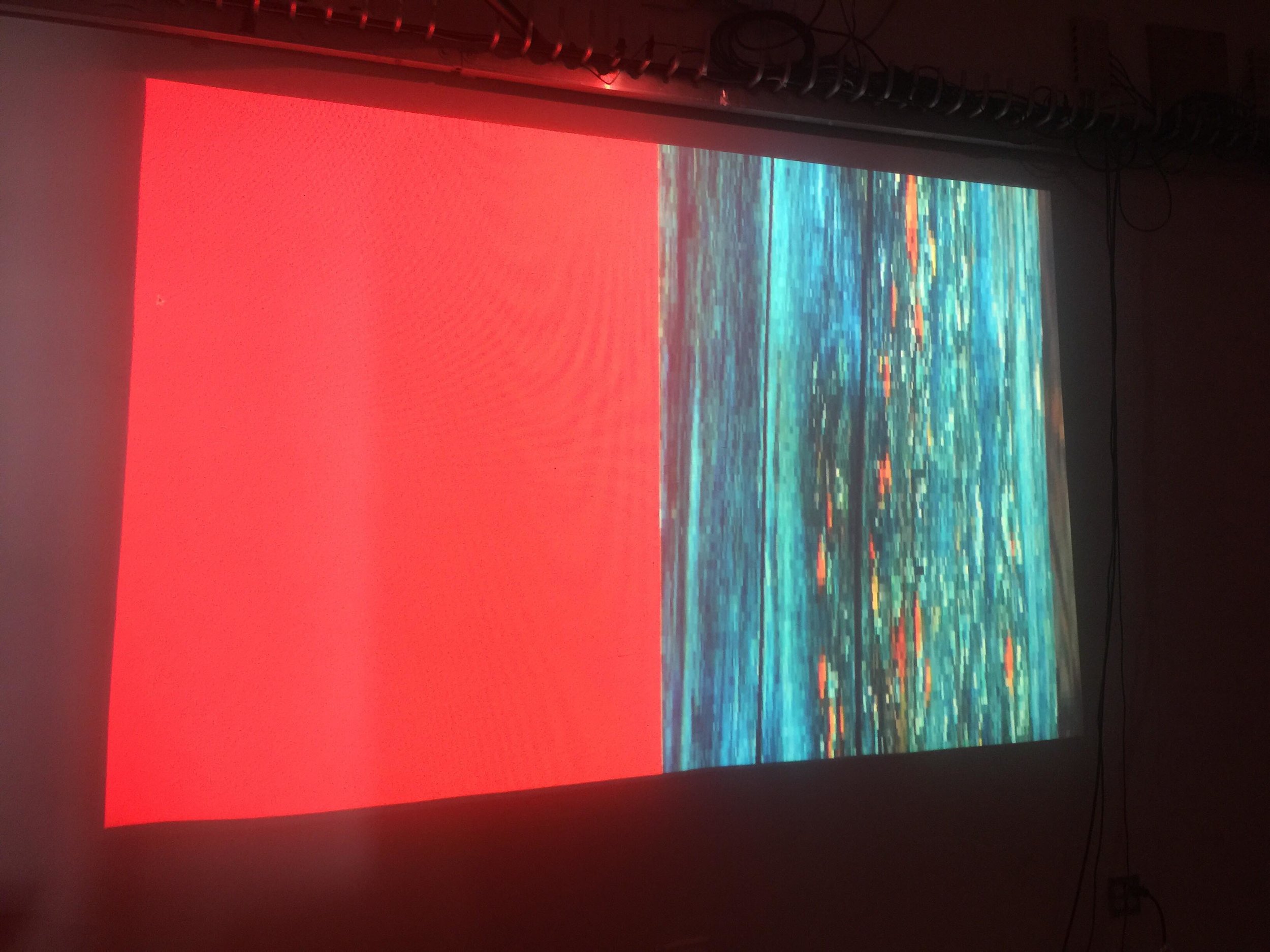

For our assignment using a Jitter patch, I used a MIDI keyboard (the Korg nanoKEY) as a controller and mapped each note to show a color on the left that correlates to the note, as well as a chosen video on the right. The video on the right was taken from a nature reel I found on YouTube, which I cut up and zoomed in on a lot to make it pretty much unrecognizable. The interaction is that you can play the keyboard and hear a sustained note that is meant to ring out over a constant soundtrack of nature sounds from the video it was sourced from (wind, birds, a plane flying overhead). The idea was to create a feeling of meandering around with no real aim or purpose, and it is intended to be played slowly.

Day 329 @ ITP: Video Sculpture - Video Mapping Test

For this project I used some panels in our classroom as a surface and assigned one color to each square. I manually turned on each color, which was accompanied by its corresponding sound. I will be documenting this project again with video.

Day 313 @ ITP: Video Sculpture ~ Learning video mapping with MadMapper "It moved!"

I sadly missed the first class of Video Sculpture and will be making up the light sculpture assignment at some point during this course, which I am looking forward to. In the second class we reviewed the projects people made in collaboration for the light sculpture assignment, then learned the basics of video mapping using MadMapper. In our third class we practiced video mapping to prepare for our next assignment. I learned that it is important to lock everything in place, including the projector and all the objects involved. I grabbed some objects from the junk shelf and practiced mapping simple colors onto them to make them look like they were different colors. My phone was freezing, and in the meantime somehow everything got moved, probably the projector when the power went off and I moved it slightly to turn it back on. There wasn't time to fix it, and I realized simple things can suddenly look sloppy if they are not perfectly on the objects, though that could potentially be played with too, like breaking out of both the rectangle of the projection and of the objects it's locked onto: onto the wall, into the space, reflecting on objects, etc. Otherwise precision is key. It was definitely inspiring just to be able to color 3D objects this way with light, and I was also thinking about blending light this way with the projector light.

Video mapping on objects, a little "off."

I'm definitely having some ideas for moving things I've been building on off the computer and into physical reality(??), or to somewhere in between. I will most likely expand on this for my final for this course, and will also try combining it in some ways with my finals for Creative Coding and Ideation and Prototyping at Tandon, so that I can maximize my time on this color/tones project through all three classes before Aug 10th rolls around and time is up... The goal will be to create combinations of colors/tones that mix with each other and move over time, and it will now incorporate video mapping for a physical version alongside a web version using JavaScript. I'm not sure yet how many surfaces will be involved or exactly how to install it, especially in a classroom at ITP, but it will be a challenge, and I want to use this opportunity to get at least a basic version of this project finished and finessed so I can iterate on it later, perhaps setting it up in my studio to expand upon during the school year. Really, I just want to experience it.

Day 307 @ ITP: Video Sculpture

Day 301 @ (Tandon School of Engineering Online): Creative Coding

Here's what I've been up to while trying to solidify my knowledge of JavaScript/p5...

https://www.openprocessing.org/user/118978#sketches

Day 259 @ (Tandon School of Engineering Online): Ideation & Prototyping

Here is my process site: https://camillapc.tumblr.com/

Day 243 @ ITP: LIPP + Algorithmic Comp Final Performances

I've been absent for a few weeks but it's partially because I was working on pieces for these...

both finals happening this week!

Day 226 @ ITP: Live Image Processing & Performance

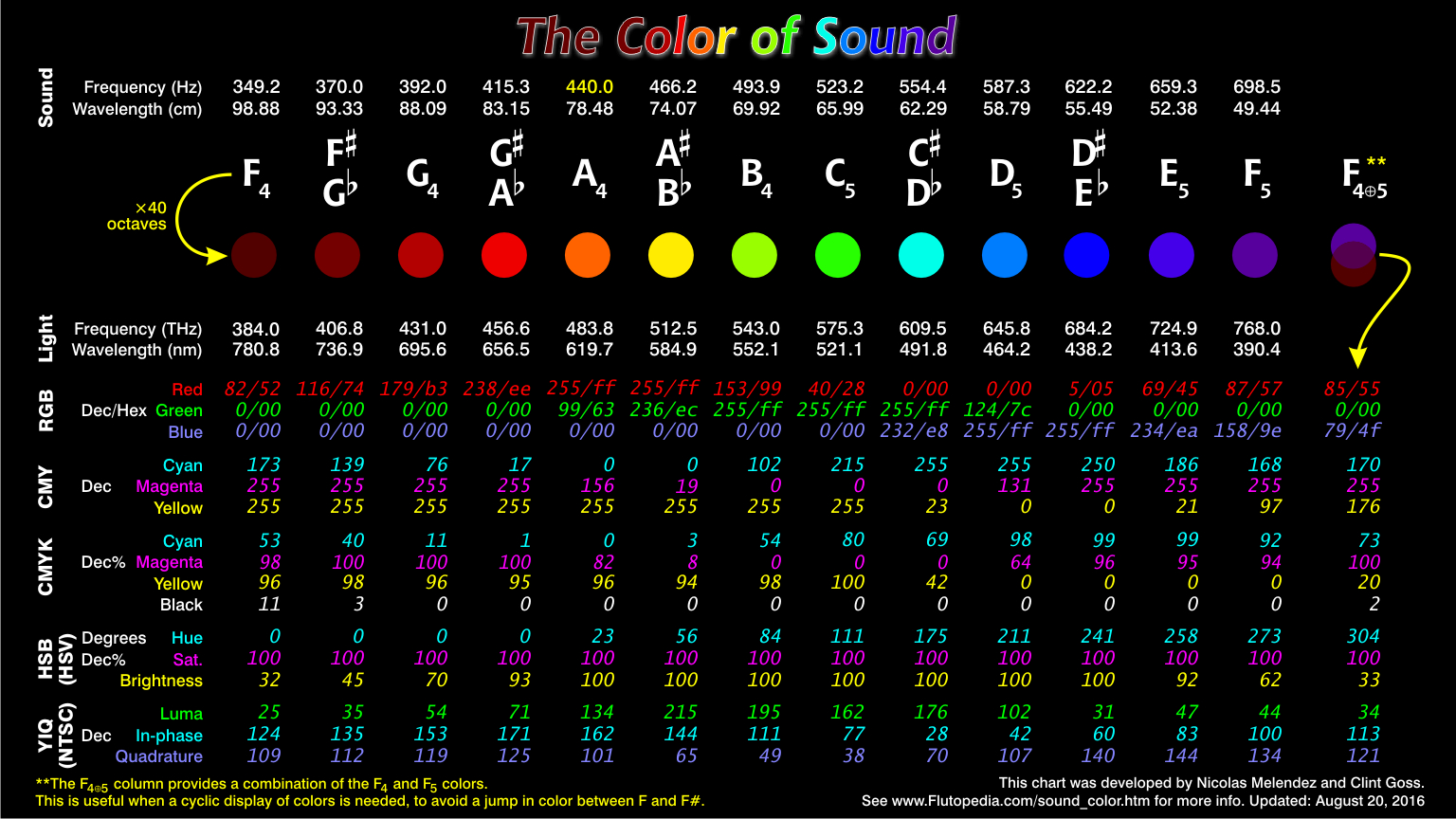

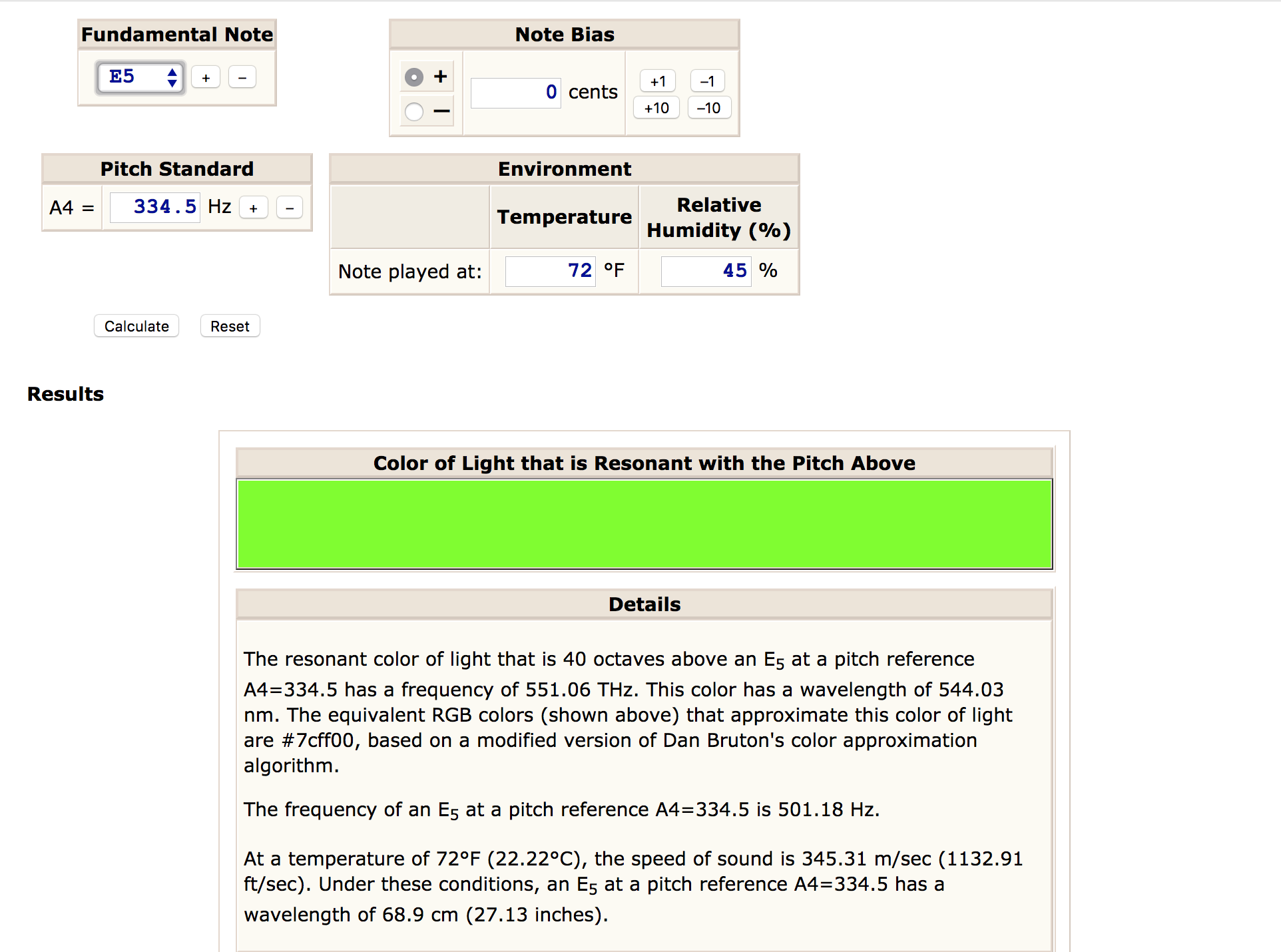

Crystal singing bowl notes ~~~> colors

My friend David measured the Hz of my crystal singing bowls for a project we are working on. So now I have a way to translate them into color, even if it is not through Max but through this website, which takes the Hz of the sounds and matches them to the THz color/frequency in light... I plan to make a chord of a few bowls at once that I would play along to, maybe in two parts. 5 minutes isn't as long as this piece would be; I think it would be something more like 15 to 20 minutes max... but it will be a study in this idea! It also seems that the relationship is not totally direct; it is somehow translating the frequency of both sound and light into the same thing, though the waves are quite different. However, it is one way to make a scientific relationship, and I think it will be interesting to work with.

Converting Sound to Color

The code above converts the frequency of sound to a frequency of light by doubling the sound frequency (going up one octave each time) until it reaches a frequency in the range of 400–800 THz (400,000,000,000,000 – 800,000,000,000,000 Hz).

That frequency is then converted into a wavelength of light, using the formula:

wavelength = speedOfLight / frequency

The speed of light that is used is the observed speed of light in a vacuum (299,792,458 m/sec).

I believe that this is a reasonable approach, even though we are not playing these sounds in a vacuum. The code for rendering colors (see below) is based on this same constant for the speed of light. When looking at resonance, I believe that it is really the frequency of the sound and light that we want to match, not the wavelength.
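To make the steps described above concrete, here is a minimal Python sketch of the octave-doubling idea. It is not the website's actual code, which I haven't reproduced here; the function name `sound_to_light` and the constant names are my own placeholders, and it stops at the wavelength rather than attempting the color rendering step.

```python
# Hypothetical sketch of the conversion described above; not the site's code.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # observed speed of light in a vacuum
VISIBLE_MIN_HZ = 400e12               # lower edge of the 400-800 THz range


def sound_to_light(sound_hz):
    """Double a sound frequency (one octave per step) until it is at least 400 THz.

    Returns (octaves_raised, light_frequency_hz, wavelength_nm).
    """
    if sound_hz <= 0:
        raise ValueError("frequency must be positive")

    light_hz = float(sound_hz)
    octaves = 0
    while light_hz < VISIBLE_MIN_HZ:
        light_hz *= 2  # going up one octave doubles the frequency
        octaves += 1

    # wavelength = speedOfLight / frequency, converted from meters to nanometers
    wavelength_nm = SPEED_OF_LIGHT_M_PER_S / light_hz * 1e9
    return octaves, light_hz, wavelength_nm


if __name__ == "__main__":
    octaves, light_hz, wavelength_nm = sound_to_light(440.0)
    print(octaves, light_hz / 1e12, wavelength_nm)
```

Because the loop stops at the first doubling that reaches 400 THz, any audible pitch ends up between 400 and 800 THz. A 440 Hz tone, for example, takes 40 doublings to land at roughly 484 THz, a wavelength of about 620 nm.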

Source

C crystal singing bowl = Blue #0000ff

D crystal singing bowl = Dark Red #bf0000

E bowl =

F crystal singing bowl = Blue #00dbff

G crystal singing bowl = Purple #540084

Ab/G# crystal singing bowl = #bf0000 (same as D ??)

And second reading... = #ffad00

B Crystal Singing Bell (handle now broken but can be chimed) = #ffc700

Result:

It looks like every color of the rainbow is represented! I have yet to measure the newest bowl I got, and can only use the broken bowl I have as a bell (cannot "bowl" it anymore). I plan to somehow gang the colors up next to each other and play along with them so that it is synchronized. It will be choreographed rather than triggered by sensors, though I think the idea came from thinking about doing it the other way around. More soon...