I've been absent for a few weeks but it's partially because I was working on pieces for these...

both finals happening this week!

Algorithmic Composition

Day 176 @ ITP: Algorithmic Composition

Class notes...

- Lewis wrote a program in the 80s for 4 bit systems (Atari, etc). The computer makes all the decisions based on the note you played, the note you played before that, and the note you played before that...

- He talks about the underlying psychometrics of jazz improvisation: a player's active memory while improvising is quite finite, just the last few notes (the last 3 notes you played, the last 3 notes your partner played, etc). He used this as a philosophical prompt to build his system around a filter.

Aristid Lindenmayer

L-systems

https://en.wikipedia.org/wiki/L-system

- Put forth idea that every organism with a cellular structure has a code

- DNA of humans, plants, animals, any living thing can be used to recreate the structure w/code
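To make the string substitution idea concrete for myself, here is a minimal sketch of an L-system in Python. It uses Lindenmayer's classic algae rules (A becomes AB, B becomes A); the mapping from symbols to MIDI notes at the end is purely my own assumption for illustration, not something from class.

```python
def expand(axiom, rules, generations):
    """Rewrite every symbol in parallel, once per generation."""
    current = axiom
    for _ in range(generations):
        # Symbols without a rule are copied through unchanged.
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current


# Lindenmayer's algae system: A -> AB, B -> A
ALGAE_RULES = {"A": "AB", "B": "A"}

# Hypothetical mapping from symbols to MIDI note numbers (an assumption).
NOTE_FOR_SYMBOL = {"A": 60, "B": 67}


def to_notes(string):
    """Turn a generated string into a list of MIDI note numbers."""
    return [NOTE_FOR_SYMBOL[symbol] for symbol in string]


if __name__ == "__main__":
    for n in range(5):
        print(n, expand("A", ALGAE_RULES, n))
```

Each generation rewrites the whole string at once, which is what gives these systems their self-similar, plant-like growth.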

PDF: The Algorithmic Beauty of Plants

Applications of Generative String Substitution Systems in Computer Music

Roger Luke DuBois

http://sites.bxmc.poly.edu/~lukedubois/dissertation/index.html

Christopher Alexander: A Pattern Language

http://library.uniteddiversity.coop/Ecological_Building/A_Pattern_Language.pdf

Day 168 @ ITP: Algorithmic Composition

Week 5

Data Sonification - Earth's Near Deaths

Nicolás E., Katya R., Camilla P.C.

OVERVIEW

Today our group met up to work on sonifying data using Csound. At first we were planning to build on the work we did on Sunday, where we created a Markov chain to algorithmically randomize the "voice" or lead flute sound from a MIDI file of "Norwegian Wood" over the guitar track using extracted MIDI notes and instruments created in Csound.

Our plan for the data sonification part of the assignment was to also take the comments from a YouTube video of the song and turn them into abstract sounds which would play over the MIDI-fied song according to their timestamp, using sentiment analysis to also change the comments' sounds according to their positive, neutral or negative sentiments. However, upon trying to implement our ideas today we found out that the process of getting sentiment analysis to work is very complicated, and the documentation online consists of many forums and disorganized information on how to do it without clear directives that we could follow.

While we may tackle sentiment analysis later on, either together or in our own projects, we decided that for this assignment it would suffice, and also be interesting to us, to use another data set and start from scratch for the second part of our project together. We searched for free data sets and came across a list of asteroids and comets that flew close to Earth (Source: https://github.com/jdorfman/awesome-json-datasets#github-api).

We built 9 instruments and parsed the data so that each one plays according to the rocks' 9 classifications, their dates and years of discovery, and their locations over a 180 degree angle. Each sound also recurs algorithmically at intervals over the piece according to its period of recurrence. We also experimented with layering the result over NASA's "Earth Song" as a way to sonify both the comets and asteroids (algorithmically, through Csound) and Earth (which they were flying over). The result was cosmic, to say the least (pun intended!).

Here are the two versions below.

PYTHON SCRIPT

By Nicolas E.

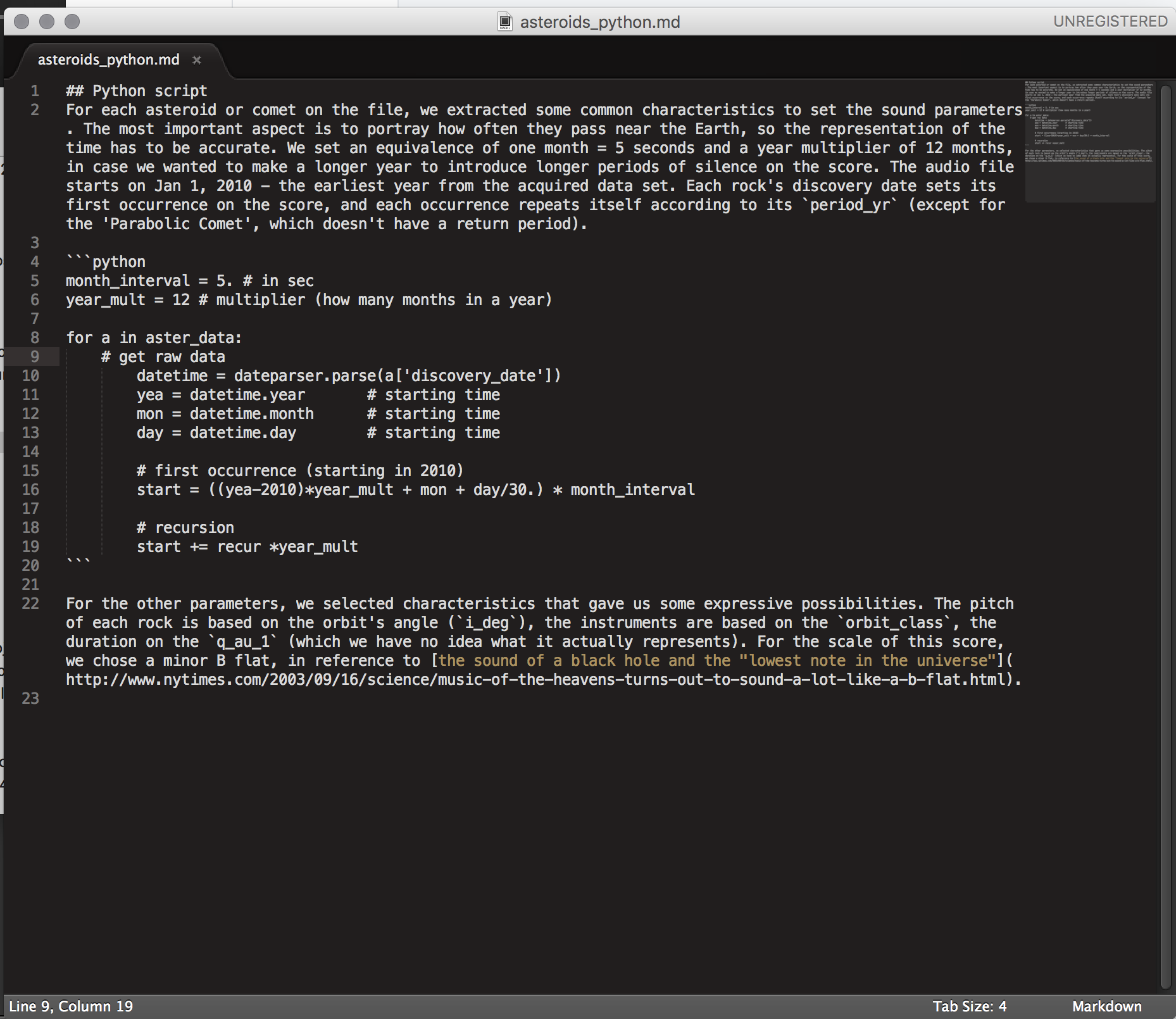

For each asteroid or comet in the file, we extracted some common characteristics to set the sound parameters. The most important aspect is to portray how often they pass near the Earth, so the representation of time has to be accurate. We set an equivalence of one month = 5 seconds and a year multiplier of 12 months, in case we wanted to make a longer year to introduce longer periods of silence in the score. The audio file starts on Jan 1, 2010 - the earliest year from the acquired data set. Each rock's discovery date sets its first occurrence in the score, and each occurrence repeats itself according to its period_yr (except for the 'Parabolic Comet', which doesn't have a return period).

    month_interval = 5.  # in sec
    year_mult = 12       # multiplier (how many months in a year)

    for a in aster_data:
        # get raw data
        datetime = dateparser.parse(a['discovery_date'])
        yea = datetime.year   # starting time
        mon = datetime.month  # starting time
        day = datetime.day    # starting time
        # first occurrence (starting in 2010)
        start = ((yea-2010)*year_mult + mon + day/30.) * month_interval
        # recursion
        start += recur *year_mult
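Here is a self-contained sketch of the timing rule described above (one month = 5 seconds, 12 months per year, score starting in 2010). It is not our actual script: it uses the standard library instead of dateparser, assumes the discovery date is an ISO-style string beginning with YYYY-MM-DD, and assumes period_yr is a number of years (or None for rocks with no return period). The function names and the piece_length parameter are made up for the example.

```python
from datetime import datetime

MONTH_INTERVAL = 5.0  # seconds of audio per month
YEAR_MULT = 12        # months per year (raise it to stretch the silences)
START_YEAR = 2010     # the score begins in 2010


def first_occurrence(discovery_date):
    """Seconds into the piece at which a rock is first heard."""
    # Assumption: the string starts with YYYY-MM-DD.
    d = datetime.strptime(discovery_date[:10], "%Y-%m-%d")
    months = (d.year - START_YEAR) * YEAR_MULT + d.month + d.day / 30.0
    return months * MONTH_INTERVAL


def occurrences(discovery_date, period_yr, piece_length):
    """All start times (in seconds) that fall before piece_length."""
    start = first_occurrence(discovery_date)
    if period_yr is None or period_yr <= 0:
        # No return period (e.g. a parabolic comet): heard at most once.
        return [start] if start < piece_length else []
    step = period_yr * YEAR_MULT * MONTH_INTERVAL  # seconds between returns
    times = []
    t = start
    while t < piece_length:
        times.append(t)
        t += step
    return times


if __name__ == "__main__":
    print(occurrences("2011-03-15T00:00:00.000", 2.0, 400.0))
```

In this sketch the recurrence step is converted all the way to seconds (years, then months, then seconds), so every start time lives on the same clock as the first occurrence.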

For the other parameters, we selected characteristics that gave us some expressive possibilities. The pitch of each rock is based on the orbit's angle (i_deg), the instrument on the orbit_class, and the duration on q_au_1 (which we have no idea what it actually represents). For the scale of this score, we chose B-flat minor, in reference to the sound of a black hole and the "lowest note in the universe".
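One way the angle-to-pitch mapping could work is sketched below. This is an illustration under assumptions, not the code we ran: it assumes the natural minor form of the scale, a root of B-flat 2 (MIDI note 46), a three-octave range, and a simple linear mapping of i_deg from 0 to 180 degrees onto the scale steps.

```python
NATURAL_MINOR = [0, 2, 3, 5, 7, 8, 10]  # semitone offsets from the root
BB_ROOT_MIDI = 46                        # B-flat 2 (assumed starting octave)


def angle_to_midi(i_deg, octaves=3):
    """Map an angle in [0, 180] degrees onto a B-flat minor scale step."""
    steps = len(NATURAL_MINOR) * octaves
    clamped = min(max(i_deg, 0.0), 180.0)
    # Scale the angle to a step index, keeping 180 degrees on the top step.
    index = min(int(clamped / 180.0 * steps), steps - 1)
    octave, degree = divmod(index, len(NATURAL_MINOR))
    return BB_ROOT_MIDI + 12 * octave + NATURAL_MINOR[degree]


def midi_to_hz(note):
    """Equal-tempered frequency with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


if __name__ == "__main__":
    for angle in (0, 45, 90, 135, 180):
        note = angle_to_midi(angle)
        print(angle, note, round(midi_to_hz(note), 2))
```

Quantizing to scale steps rather than mapping the angle straight to frequency keeps every rock in key, whatever its orbit.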

INSTRUMENTS

by Katya R.

The first three corresponded to the three most commonly occurring meteors and asteroids. These are subtle "pluck" sounds; the pluck opcode in Csound produces naturally decaying plucked-string sounds.

The last six instruments consisted of louder, higher frequency styles.

Instrument four is a simple oscillator.

Instruments five, six, and eight are VCOs (analog-modeled oscillators) with a sawtooth waveform.

Instrument seven is a VCO with a square waveform.

Instrument nine is a VCO with a triangle waveform.

linseg is an opcode we used to add some vibrato to instruments 6 to 9. It traces a series of line segments between specified points, generating a control or audio signal whose value passes through 2 or more specified points.

Each instrument's a-rate signal takes the variables p4, p5, and p6 (which we set to frequency, amplitude, and pan), which correspond to values found in the JSON file under each instance of a meteor/asteroid near Earth. The result is a series of plucking sounds with intermittent louder and higher frequency sounds with some vibrato. The former represent the more common, smaller meteors and asteroids, and the latter represent the rarer asteroid and meteor types.
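To show how those p-fields end up in the score, here is a small sketch that formats Csound "i" statements from Python. In a score line, p1 is the instrument number, p2 the start time, and p3 the duration; p4, p5, and p6 follow our convention of frequency, amplitude, and pan. The helper names and the sample values are assumptions for illustration, not values from our data.

```python
def score_line(instr, start, dur, freq, amp, pan):
    """Format one Csound score event: i p1 p2 p3 p4 p5 p6."""
    return f"i{instr} {start:.3f} {dur:.3f} {freq:.2f} {amp:.2f} {pan:.2f}"


def build_score(events):
    """Sort events by start time and close the score with an 'e' statement.

    Each event is a tuple (instr, start, dur, freq, amp, pan).
    """
    ordered = sorted(events, key=lambda event: event[1])
    lines = [score_line(*event) for event in ordered]
    lines.append("e")  # marks the end of the score
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up events: two quiet plucks and one louder VCO note.
    demo = [
        (4, 120.0, 3.0, 466.16, 0.60, 0.75),
        (1, 77.5, 2.0, 233.08, 0.40, 0.25),
        (2, 90.0, 2.0, 277.18, 0.40, 0.50),
    ]
    print(build_score(demo))
```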

Poetry/code in motion ~ Photo by Katya R.

Description of our code by Nicolás E. ~ See the full project on GitHub here

Day 162 @ ITP: Algorithmic Composition

Working in Csound

Using python to create a sound file (Via Nicolás E.)

Ok, so I use it from the terminal. I have my orc and sco files (orchestra and score) and I use the csound command. It's something like this: csound blah.orc blah.sco -o foo.wav (where -o is for specifying the output).

The other option is to do everything in the Csound "app". The .csd files are kinda formatted like html, so it's easy to see the different parts. You have the <CsInstruments> for the instruments and the <CsScore> for the score. What Luke always does in class is create the instruments, then run a script to create the score, and paste it into that section...

His scripts print everything to the terminal, so you need to tell it to take all those logs and put them in a file. You do that with ">". So, if your script is "score.js", you'd do: node score.js > output.txt (or output.sco if you want to run csound later from the command line) (I do it that way)
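The same workflow can be sketched in Python: instead of pasting the generated score by hand, a script can wrap the orchestra and score in the .csd sections and print the whole file to the terminal. This is only a sketch; the file names, the build_csd helper, and the one-instrument orchestra are placeholders I made up, not Luke's or Nicolás's code.

```python
CSD_TEMPLATE = """<CsoundSynthesizer>
<CsOptions>
-o {output}
</CsOptions>
<CsInstruments>
{orchestra}
</CsInstruments>
<CsScore>
{score}
</CsScore>
</CsoundSynthesizer>
"""

# Placeholder orchestra: one plucked-string instrument reading
# p4 = frequency, p5 = amplitude, p6 = pan.
ORCHESTRA = """
sr = 44100
ksmps = 32
nchnls = 2
0dbfs = 1

instr 1
  aout pluck p5, p4, p4, 0, 1
  outs aout * (1 - p6), aout * p6
endin
"""


def build_csd(orchestra, score, output="foo.wav"):
    """Paste an orchestra and a score into the sections of a .csd file."""
    return CSD_TEMPLATE.format(
        output=output, orchestra=orchestra.strip(), score=score.strip()
    )


if __name__ == "__main__":
    demo_score = "i1 0 2 220 0.5 0.5\ni1 2 2 330 0.5 0.5\ne"
    print(build_csd(ORCHESTRA, demo_score))
```

If this were saved as make_csd.py (a hypothetical name), running python make_csd.py > piece.csd and then csound piece.csd would render the file, using the same ">" trick as above.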

Other resources:

An Instrument Design TOOTorial

http://www.csounds.com/journal/issue14/realtimeCsoundPython.html

I had to miss class today because I was worried I was coming down with something, but I am spending the afternoon trying to get at least *1* composition working in Csound. Yesterday I sat with a classmate for a while and, with the help of Nicolás above, we figured out more how to navigate the way that Csound works using the terminal to save and render files. At first Csound felt very unintuitive and impenetrable but I am starting to understand the incredible potential it has for creating complex instruments and scores. The next few weeks for me will be about just generating some stuff, to see what happens, starting with today. More in a bit.

Update 2/19:

Nicolás E., Katya R. and I are working together, which is very helpful because I am totally lost in terms of how to get the code to function properly for this (!), but I think we are all learning. We decided to go with a MIDI file of Norwegian Wood (based on Luke's joke from class about how it is #45's favorite song to karaoke to...) and made a Markov chain to randomize the "voice" track (flute lead), remixing it using only the notes that are originally in the song. We also extracted the guitar notes and play them in order.
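For my own understanding, here is a minimal sketch of a first-order Markov chain over a melody. It is not our group's code: the note list is a made-up placeholder rather than the actual flute line, and restarting from the first note at a dead end is my own assumption.

```python
import random
from collections import defaultdict


def build_transitions(notes):
    """Record, for each note, every note that follows it in the source."""
    transitions = defaultdict(list)
    for current, following in zip(notes, notes[1:]):
        transitions[current].append(following)
    return transitions


def generate(notes, length, seed=None):
    """Random-walk the transition table to produce a remixed melody."""
    rng = random.Random(seed)
    transitions = build_transitions(notes)
    current = notes[0]
    melody = [current]
    while len(melody) < length:
        choices = transitions.get(current)
        if choices:
            # Repeated entries make common transitions more likely.
            current = rng.choice(choices)
        else:
            # Dead end (a note never followed by anything): start over.
            current = notes[0]
        melody.append(current)
    return melody


if __name__ == "__main__":
    source = [64, 66, 64, 62, 61, 59, 57, 59, 61, 62, 64]  # placeholder MIDI notes
    print(generate(source, 16, seed=1))
```

Because the chain only ever moves between notes that were neighbors in the source, the output stays within the notes of the song while the order changes.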

Our next step is to take comments made on the YouTube video for Norwegian Wood and insert them all as sounds that play along with the song, interrupting it, possibly with sentiment analysis controlling how they sound (probably just positive vs negative vs neutral, high to middle to low or something like that) if we can figure that out by Wednesday... : ) We had some help and advice also from the resident Hannah, who does work with generative music and sentiment analysis. It is fun to work in a group for this, and also to combine (possibly also just learn to compromise) our lofty concepts with also just learning how these generative processes can work and learning about the code needed to get there, at least using Csound...

Our group's project on GitHub: https://github.com/nicolaspe/comment_me

Audio from the first stage...

Day 157 @ ITP: Thinking about Algorithmic Composition + LIPP

2/9

I was thinking it could be fun to somehow very specifically link up notes and colors. I don't know how easy that is to accomplish. Something like this video that I found:

It seems to be mapping the sounds very specifically with the colors. So it is not triggering them through analyzing the sounds on the other end, but rather triggering an associated visual every time a sound is played... So it's not so much audio reactive but "input reactive".. Would it be possible to have a slightly different visual for a range of up to ~127 individual inputs?
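As a rough answer to my own question, here is a sketch of one way to give every MIDI note (0 to 127) its own color. The scheme is entirely my own assumption: hue follows the pitch class, so the same note name keeps the same hue, and brightness follows the octave.

```python
import colorsys


def note_to_rgb(note):
    """Map a MIDI note number (0-127) to a distinct (r, g, b) tuple."""
    if not 0 <= note <= 127:
        raise ValueError("MIDI note numbers run from 0 to 127")
    hue = (note % 12) / 12.0           # pitch class picks the hue
    octave = note // 12                # 0 through 10
    value = 0.3 + 0.7 * (octave / 10)  # higher octaves are brighter
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)
    return (round(r * 255), round(g * 255), round(b * 255))


if __name__ == "__main__":
    for note in (36, 48, 60, 67, 72):
        print(note, note_to_rgb(note))
```

Since the color comes straight from the note number, the visuals would be "input reactive" in the sense above: no audio analysis is needed on the other end.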

I also would like to somehow trigger light in or on crystal singing bowls when they are played... possibly with projection mapping? Or, the bowls could also turn on a physical light inside them when I hit them with a mallet (though it might make the bowls vibrate sonically to have a light inside them, so would have to think of a way to do it that wouldn't affect the sound). But that would be a different scenario...maybe in tandem with the algorithmically generated music and visuals which are responding to the notes. Possibly the bowls could also be affecting the projections so there is a symbiosis there as well. Here is an example of someone doing something similar (but with lights that change color, and without turning off):

Update 2/12 (for LIPP)

I have been reading a lot and thinking about the kinds of systems that I would like to work on in general and using the tools in our class.

I am definitely very influenced at the moment by the Light and Space artists I am reading about for Recurring Concepts in Art, and installations by James Turrell and others who work with perception. I would like to create something in this school or vein but also with incorporating sound, and slowly fading in between a series of "places" that create an arc of experience. So the key word is "slow" - but constantly moving and shifting, maybe with some central focus point that could also have elements be introduced or taken away or faded away rather than flashing in and out which seems to be what a lot of video effects are about...

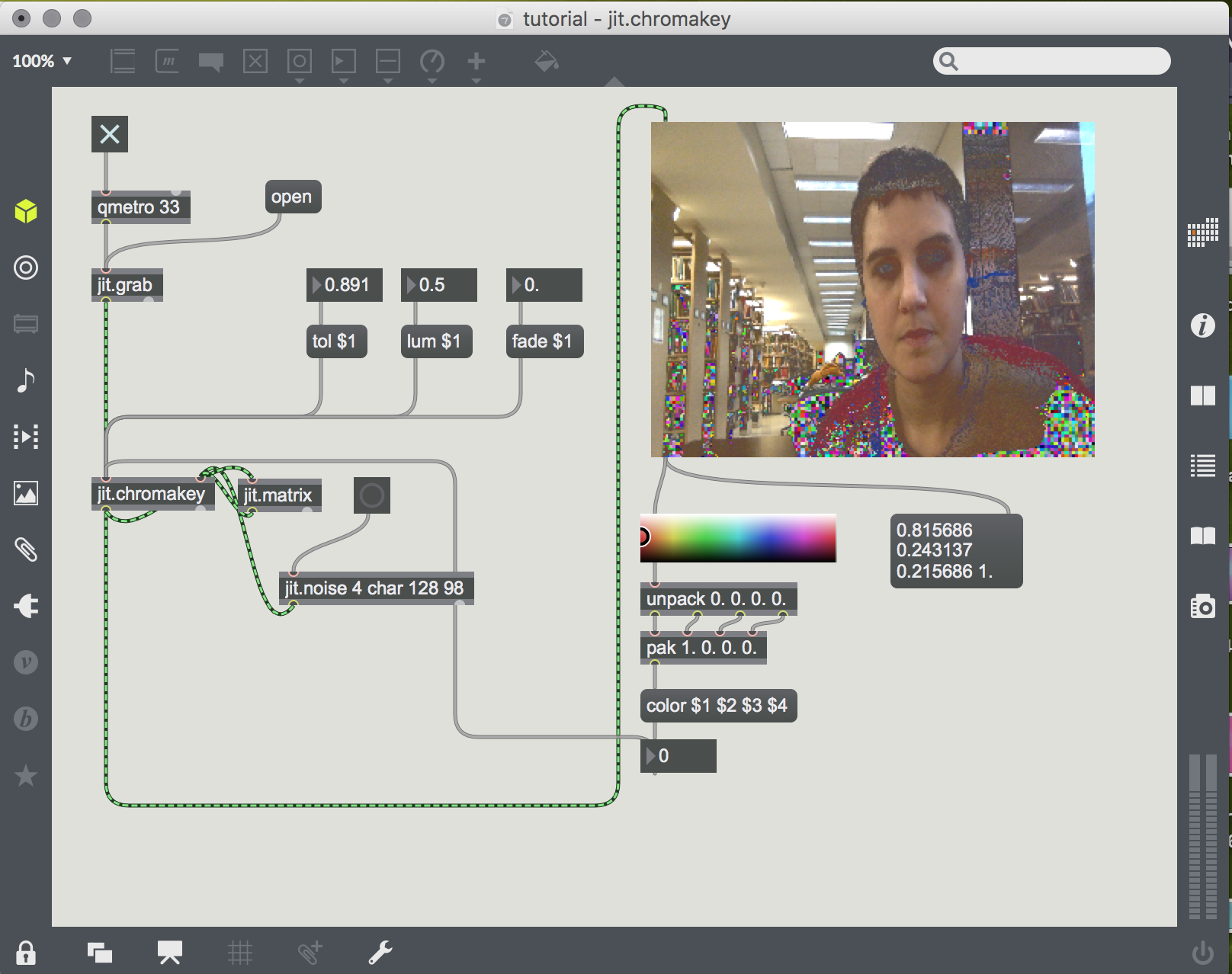

I also realize that a lot of figuring this out will come from raw experimentation, and I still need to do a lot more of that. I have been following the tutorial videos for our class but have not worked much more on my own Max patch yet(!), but plan to really get into it over the next two weeks. I still need to work more with the jit.smooth function. I tried to add it but it turned orange, so I was wondering if we could go over this in my office hours appt with you (this Friday from 2:40-3pm)?

I attended two live audio-visual performances this past week, the one at 3 Legged Dog last Saturday, and one this past Saturday as well at Spectrum in Brooklyn which I was also playing music in, where a video artist did live visuals for each of the 4 acts, and I was wondering if I could do a review of some kind of amalgamation of a few sets from different shows instead of just one event. Other than being a little stuck with Max at the moment I have been feeling inspired by various things in relation to our class!

Bobst library stacks w/ jit.lumakey tutorial