Final project: Color Theremin V2

WHAT IT IS

The Color Theremin is a gesture-controlled musical instrument which plays and manipulates sound samples based on my hand movements, with customizable lights triggered along with the sounds in an accompanying light sculpture.

WHY I MADE IT

I have been interested in the interaction between sound and light for many years. I made this instrument to have a more expressive way to manipulate sounds alongside an interactive light component, and to have a new and fun way to perform, create, and record music. I was also inspired by some of the exercises we did in class using granular synthesis and manipulation of samples along the x/y axes.

I also think the Color Theremin could become a standalone interactive installation, but it needs more work first. I will keep working through different iterations of the project to improve the light/sound interactions.

HOW IT WORKS

The samples are triggered by hand movements along the X, Y, and Z axes. Moving the hand left/right, up/down, or forward/backward triggers the samples and also changes the speed and volume at which they are played. The core elements of the setup are Max MSP and MadMapper, as well as addressable LEDs for the light component.
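
To make the idea of mapping hand position to sound parameters more concrete, here is a minimal Python sketch. It is not the actual Max MSP patch. The axis assignments (X picks the sample, Y sets the speed, Z sets the volume), the number of samples, the 0..1 input range, and the 0.5x to 2x speed range are all assumptions made for illustration.

```python
NUM_SAMPLES = 4  # hypothetical number of loaded samples


def clamp01(value):
    """Keep a normalized coordinate inside 0..1."""
    return max(0.0, min(1.0, value))


def scale(value, out_lo, out_hi):
    """Linearly map a 0..1 value onto the range out_lo..out_hi."""
    return out_lo + clamp01(value) * (out_hi - out_lo)


def hand_to_params(x, y, z):
    """Turn a normalized hand position into playback parameters.

    Assumed mapping (not taken from the original patch):
      x -> which sample is triggered
      y -> playback speed, from 0.5x to 2x
      z -> volume, from 0.0 to 1.0
    """
    sample_index = min(int(clamp01(x) * NUM_SAMPLES), NUM_SAMPLES - 1)
    return {
        "sample_index": sample_index,
        "speed": scale(y, 0.5, 2.0),
        "volume": scale(z, 0.0, 1.0),
    }


if __name__ == "__main__":
    # Hand in the middle of the tracked space
    print(hand_to_params(0.5, 0.5, 0.5))
```

Clamping the input keeps the parameters in range if the hand briefly leaves the tracked area.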

THE CODE

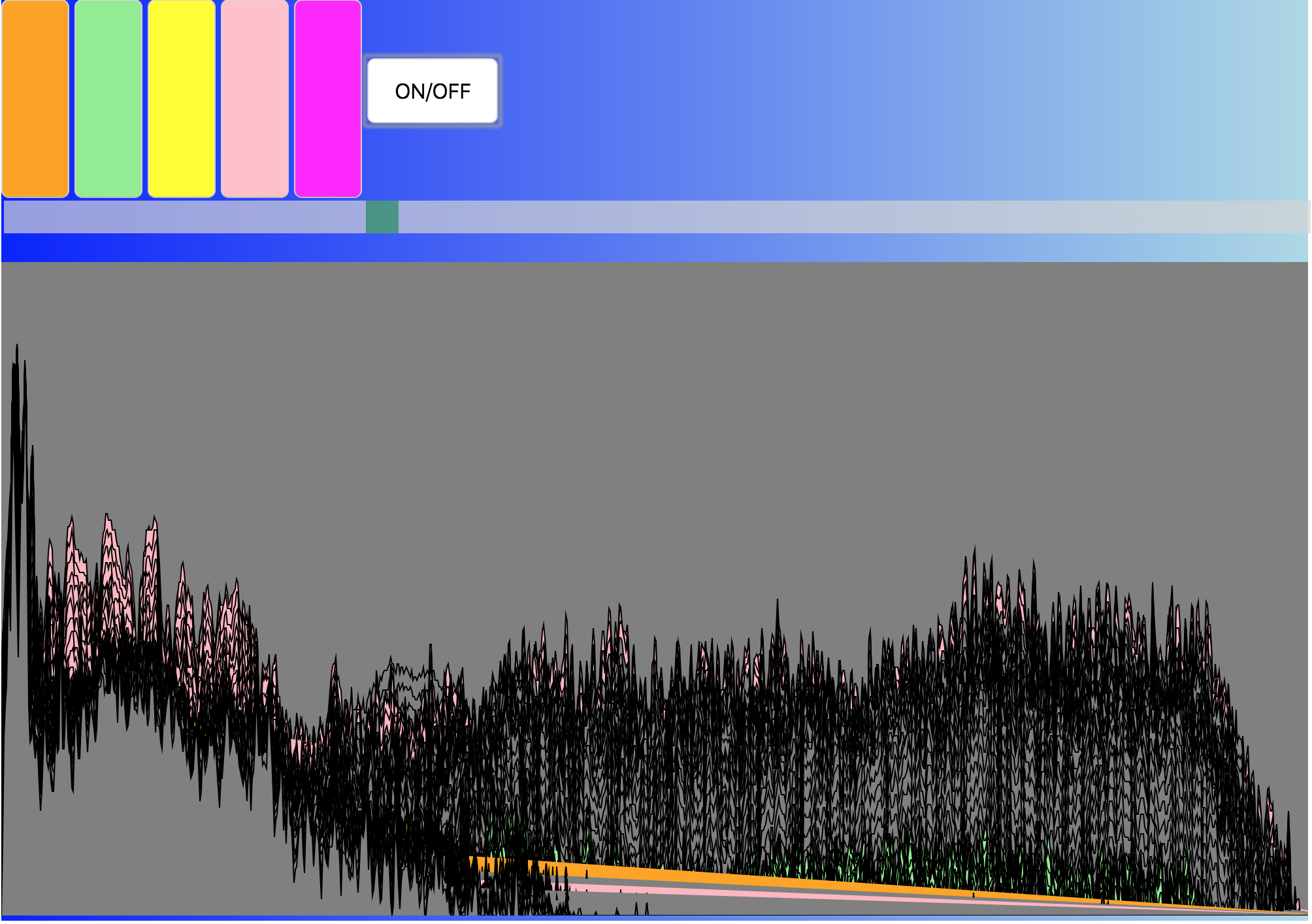

The sound for the Color Theremin V2 was programmed entirely in Max MSP, which also sends MIDI control change messages to MadMapper to trigger the LEDs.
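
For readers unfamiliar with MIDI control changes, the sketch below shows in Python what such a message looks like at the byte level. It only illustrates the message format and is not the Max MSP patch itself. The controller number 20 and the idea of sending a normalized hand position as the value are assumptions; the controller numbers actually used are not given here.

```python
def control_change(channel, controller, value):
    """Build the 3 raw bytes of a MIDI control change message.

    channel:    0..15 (shown as channels 1..16 in most software)
    controller: 0..127
    value:      0..127
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15")
    if not 0 <= controller <= 127:
        raise ValueError("controller must be in 0..127")
    if not 0 <= value <= 127:
        raise ValueError("value must be in 0..127")
    # 0xB0 is the control change status byte; the low 4 bits carry the channel.
    return bytes([0xB0 | channel, controller, value])


def position_to_cc_value(position):
    """Map a normalized 0..1 position to a 7-bit MIDI value (0..127)."""
    position = max(0.0, min(1.0, position))
    return int(position * 127 + 0.5)


if __name__ == "__main__":
    # Hypothetical: controller 20 drives the brightness of one LED group.
    message = control_change(0, 20, position_to_cc_value(1.0))
    print(message.hex())
```

Because the value is only 7 bits wide, each control change can carry 128 distinct levels.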

SCREEN CAPTURE/VIDEO