Day 169 @ ITP: Recurring Concepts in Art

Week 5 Readings

Shigeko Kubota's Vagina Painting (1965) was a performance in which she crouched over a large sheet of paper on the floor and painted with a red-daubed paintbrush attached to her knickers.

Her video art on EAI website: https://www.eai.org/artists/shigeko-kubota/titles

Victor Turner's notion of liminality is a state of 'betwixt and between, a fructile chaos, a storehouse of possibilities' (1982). Other writers have also described some kind of position outside binary thinking, a state disruptive of unity and closure.

Informe, according to Bataille, has no definition but is performative, like an obscene word. It performs the operation of creating taxonomic disorder and a perpetual maintenance of potentials.

In Purity and Danger anthropologist Mary Douglas discussed pollution taboos concerning the unassimilable waste that is outside the constitution of things that are defined (1966). And the Brazilian artist Helio Oiticica argued that art has no autonomous object state, but is instead a searching process, a constructive nucleus, an enactment (1969).

Whilst Turner's limen is the threshold and a striving after new forms and structures, Bataille's informe is an inchoateness through which meaning briefly emerges, and Douglas describes pollution and dirt as a 'fearful generative site'.

Of course there are many differences between the ideas sketchily outlined above, but the focus of this article is a perception of a shared notion in liminality, informe, pollution and process art of an oscillating flux that does not halt. This is an idea that is also present in contemporary scientific developments in chaos theory and quantum physics. This article discusses this oscillating flux in relation to a range of visual artists using their own bodies in their artworks - in performance, painting, sculpture, photography, film and video.

Visual artists using their own bodies as the site for art wreak havoc with categorisation from several angles. The artist's body is an art object that will not stay put and fixed in its role; it is contingent and gets up and walks back into the artist's life. As art object the artist's body is always ephemeral.

The liminal, the informe, the abject and the taboo undo the work of rationalisation. According to French Fluxus artist Ben Vautier, art is dirty work but somebody has to do it. And 'messy' body artists such as Carolee Schneemann, the Viennese Actionists and Paul McCarthy certainly bear him out. Janine Antoni washed and painted a gallery floor with her hair in the performance Loving Care (1992). Cheryl Donegan made prints of shamrocks with her green paint-smeared buttocks in the video Kiss My Royal Irish Arse (1993).

Gilles Deleuze's analysis of Francis Bacon's paintings emphasises their depiction of the human body as 'meat' (1981: 197-198). Talking about the effect he wanted in his paintings Bacon said, 'I would like my pictures to look as if a human being had passed between them, like a snail, leaving a trail of the human presence and memory trace of past events, like the snail leaves its slime' (cited in Chipp, 1968: 621).

Marcel Duchamp's Sinning Landscape (1946) was made with semen on black velvet.

Chilean artists Diamela Eltit and Raul Zurita made performances in the 80s using their own bodies, protesting against inhumanity in an oppressive regime. Critic Nelly Richard comments on their work,

The threshold of pain enables the mutilated subject to enter areas of collective identification, sharing in one's own flesh the same signs of social disadvantage as the other unfortunates. Voluntary pain simply legitimates one's incorporation into the community of those who have been harmed in some way - as if the self-inflicted marks of chastisement in the artist's body and the marks of suffering in the national body, as if pain and its subject could unite in the same scar (1986: 66, 68).

Filmmaker David Cronenberg has described the basis of horror as the fact that we cannot comprehend how we can die (cited in Kaufmann, 1998). At the same time medical, scientific and technological advances relating to the body's health, reproductive function and death, seem to make the body's functions increasingly conceptual and euphemised.

Mircea Eliade writes that, 'The archaic and Oriental cultures succeeded in conferring positive values on anxiety, death, self-abasement and upon chaos' (1960: 14). But in Western culture death and the body as flux is still a taboo vision. Most critiques of this type of body art cannot get past the Western cultural obsession with the central, terminal, cumulative self - the individual ego, to see beyond to a use of the self as universal. Talking about Dada dance, Hugo Ball commented that 'Dance … is very close to the art of tattooing and to all primitive representative efforts that aim at personification' (1996). With his use of the word 'personification' Ball seems to be getting at a notion of the individual body inscribed, carrying the weight of collective ideas, rather than the individual engaged in self-expression.

Marina Abramovic and Ulay sat opposite each other across a table for a total of 90 days, not moving, not speaking and fasting. The complete performance was undertaken over several chunks of time in different cities around the world. Their longest continuous presentation lasted 16 days. The artists presented themselves as embodied consciousnesses, in the process of being. For Abramovic the job of the artist is to reveal the mystery of existence and to act as a transmitter of energy. 'The deeper you go into yourself, the more universal you come out on the other side' (quoted in Pijnappel, 1995).

Susan Hiller's Draw Together (1972) was an experiment in telepathy and Dream Mapping (1974) was an experiment in group dreaming. Hiller describes art ideas as existing below a verbal recognition level where artists grab on to them (see Einzig, 1996). James Turrell's work experiments with perceptual psychology, light and states of being. He has remarked that art is about bringing images back from the dream world to here. Shelley Sacks' Thought Bank (1994) was based on the idea that water remembers and that thought can be imprinted on water. In live performances Bruce Gilchrist has attempted to externalise images and sounds from the interior of his sleeping body (Divided by Resistance, 1996) (see Keidon, 1996 and Warr, 1996). Working with a BBC Outside Broadcast Unit, a group of mediums, a thermal camera and sound equipment, Kathleen Rogers' PsiNet (1994) set up a parallel between psychic transmission and reception and technological transmission and reception (see La Frenais, 1994).

Speech has been over-emphasised as the privileged means of human communication, and the body neglected. It is time to rectify this neglect and to become aware of the body as the physical channel of meaning. (Douglas, 1978: 298)


The body is the proof of identity. The body is language. My consciousness of the body as such became so strong that it became a pressure I couldn't get rid of. I wanted to grasp this consciousness and get rid of the pressure in my painting, but I found that painting for me lacked the possibility of expressing the directness that I felt through contact with the body. Furthermore, painting could not make me feel the existence of my body in my work. I realized that any medium beyond my body seemed too remote from myself. Thus, I decided that the only way I could be an artist was by using my body as the basic medium and language of my art.

...the tendency of self-torturing is not just a personal problem. It is a common phenomenon, especially so in the present circumstances of China today. In the suburban area of Beijing where we live, there also live thousands of peasants who come from all over the country to make a living selling vegetables. Every morning they have to get up at four o'clock for their work. I believe they wish they could have more time for sleep, like the rest of us. But they can't. If one has to do something one doesn't want to do, that is a kind of self-torturing. Everybody has this tendency. Some are conscious of it, while others don't want to admit it. (Zhang Huan)

Day 168 @ ITP: Algorithmic Composition

Week 5

Data Sonification - Earth's Near Deaths

Nicolás E., Katya R., Camilla P.C.

OVERVIEW

Today our group met up to work on sonifying data using Csound. At first we were planning to build on the work we did on Sunday, where we created a Markov chain to algorithmically randomize the "voice" or lead flute sound from a MIDI file of "Norwegian Wood" over the guitar track using extracted MIDI notes and instruments created in Csound.

Our plan for the data sonification part of the assignment was to also take the comments from a YouTube video of the song and turn them into abstract sounds which would play over the MIDI-fied song according to their timestamp, using sentiment analysis to also change the comments' sounds according to their positive, neutral or negative sentiments. However, upon trying to implement our ideas today we found out that the process of getting sentiment analysis to work is very complicated, and the documentation online consists of many forums and disorganized information on how to do it without clear directives that we could follow.

While we may tackle sentiment analysis later on, either together or in our own projects, we decided that for this assignment it would suffice, and also be interesting to us, to use another data set and start from scratch for the second part of our project together. We searched for free data sets and came across a list of asteroids and comets that flew close to Earth (source: https://github.com/jdorfman/awesome-json-datasets#github-api).

We built 9 instruments and parsed the data so that each object plays according to its classification (there are 9), its date and year of discovery, and its location over a 180 degree angle. Each sound also recurs algorithmically at intervals over the piece according to the object's period of recurrence. We also experimented with layering the result over NASA's "Earth Song" as a way to sonify both the comets and asteroids (algorithmically, through Csound) and Earth (which they were flying over). The result was cosmic to say the least (pun intended!)

Here are the two versions below.

PYTHON SCRIPT

By Nicolas E.

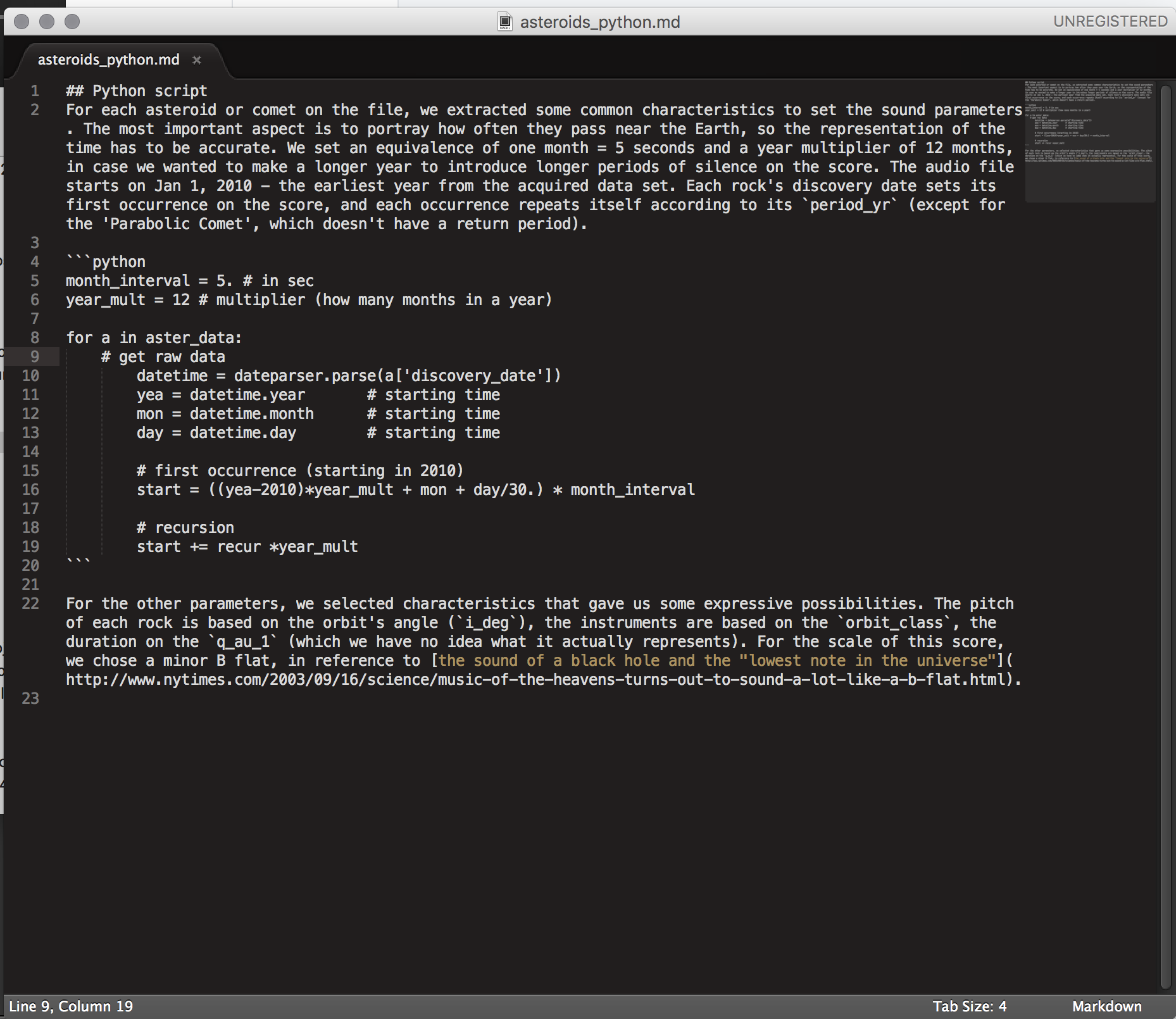

For each asteroid or comet in the file, we extracted some common characteristics to set the sound parameters. The most important aspect is to portray how often they pass near the Earth, so the representation of time has to be accurate. We set an equivalence of one month = 5 seconds and a year multiplier of 12 months, in case we wanted to make a longer year to introduce longer periods of silence in the score. The audio file starts on Jan 1, 2010 - the earliest year in the acquired data set. Each rock's discovery date sets its first occurrence in the score, and each occurrence repeats itself according to its period_yr (except for the 'Parabolic Comet', which doesn't have a return period).

    month_interval = 5.  # in sec
    year_mult = 12       # multiplier (how many months in a year)

    for a in aster_data:
        # get raw data
        datetime = dateparser.parse(a['discovery_date'])
        yea = datetime.year   # starting time
        mon = datetime.month  # starting time
        day = datetime.day    # starting time

        # first occurrence (starting in 2010)
        start = ((yea-2010)*year_mult + mon + day/30.) * month_interval

        # recursion
        start += recur * year_mult
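The timing rules described above can be sketched as a small self-contained script. This is only an illustration, not the project's actual code: the function names `first_occurrence` and `occurrence_times` and the `score_length` parameter are hypothetical, and it assumes each repeat is spaced by `period_yr` converted into score seconds.

```python
from datetime import date

MONTH_INTERVAL = 5.0  # seconds of audio per month (value from the post)
YEAR_MULT = 12        # months per score "year" (value from the post)
START_YEAR = 2010     # the score starts in 2010


def first_occurrence(discovery):
    """Score time in seconds of an object's first appearance.

    Uses the same formula as the snippet in the post: whole years since
    2010, plus the month number, plus the day as a fraction of a month.
    """
    months = (discovery.year - START_YEAR) * YEAR_MULT
    months += discovery.month + discovery.day / 30.0
    return months * MONTH_INTERVAL


def occurrence_times(discovery, period_yr, score_length):
    """All start times (seconds) that fall before score_length.

    Assumption: a missing or non-positive period (e.g. a parabolic comet)
    means the object sounds only once.
    """
    start = first_occurrence(discovery)
    if not period_yr or period_yr <= 0:
        return [start] if start < score_length else []
    step = period_yr * YEAR_MULT * MONTH_INTERVAL  # period in score seconds
    times = []
    while start < score_length:
        times.append(start)
        start += step
    return times


if __name__ == "__main__":
    # Hypothetical object discovered mid-June 2011 with a one-year period.
    print(occurrence_times(date(2011, 6, 15), 1.0, 300.0))
```

Keeping the month length and year multiplier as separate constants means a "longer year" only requires changing `YEAR_MULT`, which matches the reason given for the multiplier.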

For the other parameters, we selected characteristics that gave us some expressive possibilities. The pitch of each rock is based on the orbit's angle (i_deg), the instrument is based on the orbit_class, and the duration is based on q_au_1 (which we have no idea what it actually represents). For the scale of this score, we chose B-flat minor, in reference to the sound of a black hole and the "lowest note in the universe".
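One possible way to turn an orbit angle into a pitch in B-flat minor is sketched below. The post does not say how the mapping was actually done, so everything here is an assumption: the natural minor form of the scale, the root (B-flat 2, MIDI note 46), the three-octave range, and the function names `angle_to_midi` and `midi_to_hz`.

```python
# Natural minor scale as semitone offsets from the root (assumed scale form).
SCALE = [0, 2, 3, 5, 7, 8, 10]
ROOT_MIDI = 46  # B-flat 2 (assumed root; not taken from the project)
OCTAVES = 3     # assumed pitch range


def angle_to_midi(i_deg):
    """Quantize an angle in [0, 180] degrees to a MIDI note in B-flat minor."""
    clamped = max(0.0, min(180.0, float(i_deg)))
    steps = len(SCALE) * OCTAVES
    index = int(clamped / 180.0 * steps)  # 0 .. steps
    octave, degree = divmod(index, len(SCALE))
    return ROOT_MIDI + 12 * octave + SCALE[degree]


def midi_to_hz(midi_note):
    """Equal-tempered frequency with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


if __name__ == "__main__":
    for angle in (0, 45, 90, 135, 180):
        note = angle_to_midi(angle)
        print(angle, note, round(midi_to_hz(note), 2))
```

Quantizing to scale degrees, rather than mapping the angle straight to a frequency, keeps every object in key however the angles are distributed.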

INSTRUMENTS

by Katya R.

The first three corresponded to the three most commonly occurring meteors and asteroids. These are subtle "pluck" sounds. A pluck in Csound produces naturally decaying plucked string sounds.

The last six instruments consisted of louder, higher frequency styles.

Instrument four is a simple oscillator.

Instruments five, six, and eight are VCOs (analog-modeled oscillators) with a sawtooth waveform.

Instrument seven is a VCO with a square waveform.

Instrument nine is a VCO with a triangle waveform.

linseg is an opcode we used to add some vibrato to instruments six through nine. It traces a series of line segments between specified points, generating control or audio signals whose values pass through two or more specified points.

Each instrument takes the p-fields p4, p5, and p6 (which we set to frequency, amplitude, and pan), which correspond to values found in the JSON file under each instance of a meteor/asteroid near Earth. The result is a series of plucking sounds with intermittent louder and higher frequency sounds with some vibrato. The former represent the more common, smaller meteors and asteroids, and the latter represent the rarer asteroid and meteor types.

Poetry/code in motion ~ Photo by Katya R.

Description of our code by Nicolás E. ~ See the full project on GitHub here

Day 167 @ ITP: Live Image Processing & Performance

I spent this rainy day at home playing with Max, finally getting somewhere after a lot of trial and error. I tried making a 3- or 4-channel video mixer in various ways with the jit.fade and also other preset mixers in the Vizzie section, but for some reason it always either made Max crash immediately upon trying to use it or made the video glitch (probably due to some signal mixing that I am not aware of). I decided to just stick to two channels and play with some basic effects to avoid crashing and to get the hang of that at least, make something with some limitations first, and hopefully add TouchOSC before next Monday to control some parameters. I also took out the random factor on the fader and turned it into a basic mixer dial which blends the two channels, and added an extra "jit.slide" module from the presets to blur one side more, which essentially does the same thing but has dials built in. I would also like to add dials to the jit.brcosa and other functions to have everything be manipulatable that way, but was wondering if there is also a way to make it change within a certain range (like 0 to 1 for brightness, instead of 0 to 500, etc.).

I am thinking now that I will just play with the video clips' audio and do a collage with the videos I made for week one, incorporating both audio and video together to sequence through ~7 stages in a sort of humorous chakra journey (which is also something I am working with in general as a concept--if not the traditional chakras then some kind of sequence like that with different sounds and colors). It will be incorporating people making tactile (non-computer) art and the music they were listening to, and what they were talking about, sometimes chopped and screwed. I may or may not also sync it with 3 minutes of a backing track that helps to sonically demarcate where the changes happen. I also experimented with adding the jit.op math operations to blend layers which does similar things to the blending layers function in Photoshop, but decided that I will not use them at least now, though am glad to know that option exists!

Realizing more deeply that everything behind art and music (at least that is made on the computer or with electronics) is directly related to math. Also I guess everything in the universe probably. Wish I was better at math but am also just glad to see the underbelly of this whole world and know what it is made of a little more intimately!

My abandoned 4 channel mixer..

Next I will narrow down and edit the video clips I want to use, and experiment with adding audio/music of my own, then try to go through a demo sequence and record it.

My current set up - back to the 2 channel video mixer with some modifications.

Day 162 @ ITP: Algorithmic Composition

Working in Csound

Using Python to create a sound file (via Nicolás E.)

Ok, so I use it from the terminal. I have my orc and sco files (orchestra and score) and I use the csound command. It's something like this: csound blah.orc blah.sco -o foo.wav (where -o is for specifying the output).

The other option is to do everything in the Csound "app". The .csd files are kinda formatted like HTML, so it's easy to see the different parts. You have the <CsInstruments> for the instruments, the <CsScore> for the score. What Luke always does in class is create the instruments, then run code to create the score, and paste it into that section...

His scripts print everything to the terminal, so you need to tell it to take all those logs and put them in a file. You do that with ">". So, if your script is "score.js", you'd do: node score.js > output.txt (or output.sco if you want to run csound later from the command line) (I do it that way)
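The same print-and-redirect workflow can be sketched in Python. This is a minimal example under stated assumptions: the helper names `score_line` and `build_score`, the file name `score.py`, and the three events are made up for illustration, and the extra p-fields (frequency, amplitude, pan) only make sense if the orchestra's instruments read them that way.

```python
def score_line(instr, start, dur, *pfields):
    """Format one Csound score "i" statement.

    p1 is the instrument number, p2 the start time and p3 the duration
    (both in beats, which are seconds at the default tempo). Anything
    after that is passed to the instrument as p4, p5, ...
    """
    fields = [str(instr), f"{start:.3f}", f"{dur:.3f}"]
    fields += [f"{p:g}" for p in pfields]
    return "i" + " ".join(fields)


def build_score(events):
    """Join events into score text, ending with the "e" (end) statement."""
    lines = [score_line(*event) for event in events]
    lines.append("e")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical events: (instrument, start, duration, freq, amp, pan)
    events = [
        (1, 0.0, 2.0, 233.08, 0.4, 0.2),
        (1, 2.5, 2.0, 277.18, 0.4, 0.5),
        (4, 5.0, 1.0, 466.16, 0.6, 0.8),
    ]
    print(build_score(events))
```

Saved as `score.py`, this would be run as `python score.py > output.sco`, and the result rendered with the csound command shown above, using `output.sco` as the score file.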

Other resources:

An Instrument Design TOOTorial

http://www.csounds.com/journal/issue14/realtimeCsoundPython.html

I had to miss class today because I was worried I was coming down with something, but I am spending the afternoon trying to get at least *1* composition working in Csound. Yesterday I sat with a classmate for a while and, with the help of Nicolás above, we figured out more how to navigate the way that Csound works using the terminal to save and render files. At first Csound felt very unintuitive and impenetrable but I am starting to understand the incredible potential it has for creating complex instruments and scores. The next few weeks for me will be about just generating some stuff, to see what happens, starting with today. More in a bit.

Update 2/19:

Nicolás E., Katya R. and I are working together, which is very helpful because I am totally lost in terms of how to get the code to function properly for this (!), but I think we are all learning. We decided to go with a MIDI file of Norwegian Wood (based on Luke's joke from class which includes how it is #45's favorite song to karaoke to...) and made a Markov chain to randomize the "voice" track (flute lead) and remix the notes within the notes that are originally in the song. We also extracted the guitar notes and play them in order.
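For reference, a first-order Markov chain over a note list can be sketched like this. It is a generic illustration and not the code in our repository: the function names `build_chain` and `generate` are hypothetical, and the short melody is a made-up list of MIDI note numbers rather than the actual flute line.

```python
import random
from collections import defaultdict


def build_chain(notes):
    """Map each note to the list of notes that follow it in the source."""
    chain = defaultdict(list)
    for current, following in zip(notes, notes[1:]):
        chain[current].append(following)
    return dict(chain)


def generate(chain, start, length, rng=None):
    """Walk the chain to produce `length` notes, beginning at `start`."""
    rng = rng or random.Random()
    if not chain:
        return [start] * length  # nothing to walk, so repeat the start note
    out = [start]
    while len(out) < length:
        choices = chain.get(out[-1])
        if not choices:
            # Dead end (a note that only appears last): jump to any note
            # that does have a successor.
            choices = list(chain)
        out.append(rng.choice(choices))
    return out


if __name__ == "__main__":
    melody = [64, 66, 64, 62, 61, 59]  # hypothetical MIDI notes
    chain = build_chain(melody)
    print(generate(chain, melody[0], 16, random.Random(1)))
```

Because successors are stored with repeats, a transition that is more frequent in the original is proportionally more likely in the remix, and every generated note is one that already occurs in the song.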

Our next step is to take comments made on the YouTube video for Norwegian Wood and insert them all as sounds that play along with the song, interrupting it, possibly with sentiment analysis controlling how they sound (probably just positive vs negative vs neutral, high to middle to low or something like that) if we can figure that out by Wednesday... : ) We had some help and advice also from the resident Hannah, who does work with generative music and sentiment analysis. It is fun to work in a group for this, and also to combine (possibly also just learn to compromise) our lofty concepts with also just learning how these generative processes can work and learning about the code needed to get there, at least using Csound...

Our group's project on GitHub: https://github.com/nicolaspe/comment_me

Audio from the first stage...

Day 160 @ ITP: LIPP Tutorials

All the tutorials from class go here...

Day 160 @ ITP: Recurring Concepts in Art

Class Notes