Week 5

Data Sonification - Earth's Near Deaths

Nicolás E., Katya R., Camilla P.C.

OVERVIEW

Today our group met up to work on sonifying data using Csound. At first we were planning to build on the work we did on Sunday, where we created a Markov chain to algorithmically randomize the "voice" or lead flute sound from a MIDI file of "Norwegian Wood" over the guitar track using extracted MIDI notes and instruments created in Csound.

For the data sonification part of the assignment, we planned to take the comments from a YouTube video of the song and turn them into abstract sounds that would play over the MIDI-fied song according to their timestamps, using sentiment analysis to vary each comment's sound by its positive, neutral, or negative sentiment. When we tried to implement this today, however, we found that getting sentiment analysis to work is very complicated, and that the online documentation is mostly scattered across forums, without clear directions we could follow.

While we may tackle sentiment analysis later, either together or in our own projects, we decided that for this assignment it would suffice, and also be interesting to us, to start from scratch with another data set for the second part of our project. We searched for free data sets and came across a list of asteroids and comets that flew close to Earth (Source: https://github.com/jdorfman/awesome-json-datasets#github-api).

We built 9 instruments and parsed the data so that they play according to the 9 orbit classifications, the dates and years of discovery, and locations over a 180-degree angle. Each sound also recurs algorithmically over the piece according to its period of recurrence. We also experimented with layering the result over NASA's "Earth Song" as a way to sonify both the comets and asteroids (algorithmically, through Csound) and Earth (which they were flying over). The result was cosmic to say the least (pun intended!).

Here are the two versions below.

PYTHON SCRIPT

By Nicolás E.

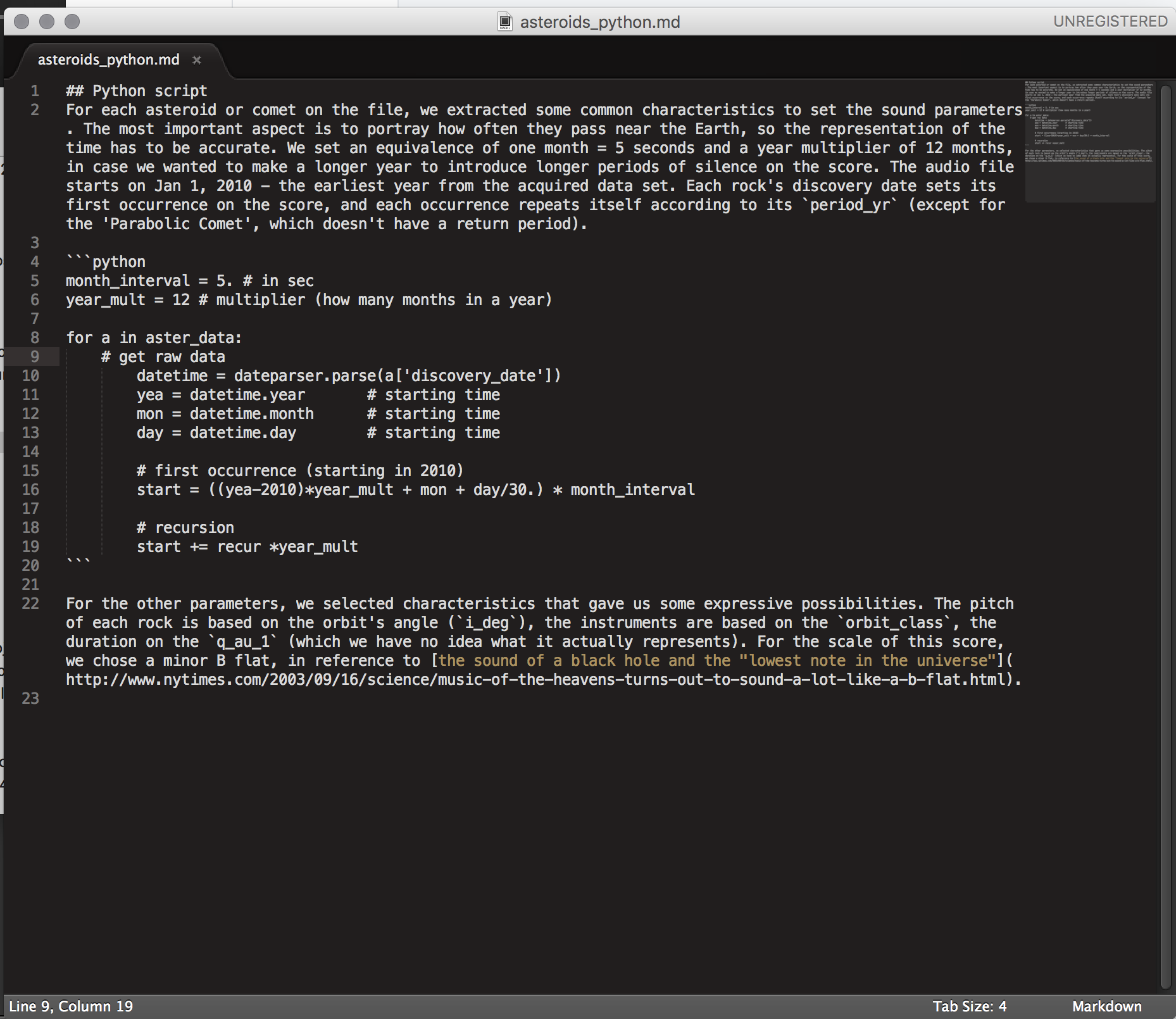

For each asteroid or comet in the file, we extracted some common characteristics to set the sound parameters. The most important aspect is to portray how often they pass near the Earth, so the representation of time has to be accurate. We set an equivalence of one month = 5 seconds and a year multiplier of 12 months, so that we could lengthen the year if we wanted longer periods of silence in the score. The audio file starts on January 1, 2010, the earliest year in the acquired data set. Each rock's discovery date sets its first occurrence in the score, and each occurrence repeats according to its period_yr (except for the 'Parabolic Comet', which doesn't have a return period).

    month_interval = 5.  # in sec
    year_mult = 12       # multiplier (how many months in a year)

    for a in aster_data:
        # get raw data
        datetime = dateparser.parse(a['discovery_date'])
        yea = datetime.year   # starting time
        mon = datetime.month  # starting time
        day = datetime.day    # starting time

        # first occurrence (starting in 2010)
        start = ((yea-2010)*year_mult + mon + day/30.) * month_interval

        # recursion
        start += recur *year_mult
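The excerpt above is only a fragment, so here is a minimal, self-contained sketch of the same timing idea. It is not the script we ran. The function names, the `end_year` cutoff, and the conversion of `period_yr` into seconds (period in years × months per year × seconds per month) are assumptions made for illustration, and it parses dates with the standard library instead of `dateparser`, assuming each date starts with `YYYY-MM-DD`.

```python
from datetime import datetime

MONTH_INTERVAL = 5.0  # seconds of audio per month (from the write-up)
YEAR_MULT = 12        # months per score "year" (from the write-up)
START_YEAR = 2010     # the score starts in 2010


def first_start(discovery_date):
    """Start time in seconds of the first occurrence.

    Uses the same formula as the excerpt, so January counts as month 1.
    Assumes the string begins with YYYY-MM-DD.
    """
    d = datetime.strptime(discovery_date[:10], "%Y-%m-%d")
    months = (d.year - START_YEAR) * YEAR_MULT + d.month + d.day / 30.0
    return months * MONTH_INTERVAL


def occurrence_times(discovery_date, period_yr, end_year=2020):
    """All start times (seconds) before the hypothetical end_year cutoff.

    period_yr=None stands for an object with no return period,
    which is played once.
    """
    start = first_start(discovery_date)
    if period_yr is None or period_yr <= 0:
        return [start]
    step = period_yr * YEAR_MULT * MONTH_INTERVAL  # assumed unit conversion
    end = (end_year - START_YEAR) * YEAR_MULT * MONTH_INTERVAL
    times = []
    k = 0
    while start + k * step < end:
        times.append(start + k * step)
        k += 1
    return times
```

For example, `occurrence_times("2010-01-01", 1.0, end_year=2012)` yields two start times that are 60 seconds apart, since one year lasts 12 × 5 seconds in the score.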

For the other parameters, we selected characteristics that gave us some expressive possibilities. The pitch of each rock is based on the orbit's angle (i_deg), the instrument on the orbit_class, and the duration on q_au_1 (which we have no idea what it actually represents). For the scale of this score, we chose B-flat minor, in reference to the sound of a black hole and the "lowest note in the universe".
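One way the angle-to-pitch mapping could work is sketched below. This is an assumption-laden illustration, not our actual mapping: the natural minor form of the scale, the base note (B-flat 2, MIDI note 46), the three-octave range, and the function names are all hypothetical choices.

```python
# Semitone offsets of the natural minor scale above its root.
MINOR_STEPS = [0, 2, 3, 5, 7, 8, 10]
BASE_MIDI = 46  # B-flat 2; hypothetical choice of lowest note


def scale_index_to_midi(index):
    """MIDI note number of the index-th note of the B-flat minor scale."""
    octave, step = divmod(index, len(MINOR_STEPS))
    return BASE_MIDI + 12 * octave + MINOR_STEPS[step]


def angle_to_freq(i_deg, octaves=3):
    """Quantize an angle in [0, 180] degrees to a scale note, in Hz."""
    n_notes = octaves * len(MINOR_STEPS)
    frac = min(max(i_deg / 180.0, 0.0), 1.0)       # clamp to [0, 1]
    index = min(int(frac * n_notes), n_notes - 1)  # 180 maps to the top note
    midi = scale_index_to_midi(index)
    return 440.0 * 2 ** ((midi - 69) / 12)         # equal temperament, A4 = 440 Hz
```

Quantizing to scale degrees, rather than mapping the angle to a continuous frequency, keeps every rock in the same key no matter how the angles are distributed.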

INSTRUMENTS

by Katya R.

The first three instruments correspond to the three most commonly occurring classes of asteroids and comets. These are subtle "pluck" sounds. A pluck in Csound produces naturally decaying plucked string sounds.

The last six instruments are louder, higher-frequency sounds.

Instrument four is a simple oscillator.

Instruments five, six, and eight are VCOs (analog-modeled oscillators) with a sawtooth waveform.

Instrument seven is a VCO with a square waveform.

Instrument nine is a VCO with a triangle waveform.
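Since the quieter instruments go to the most common classes, one way to assign instrument numbers is to rank the classes by how often they appear in the data. The sketch below assumes that ranking rule and a hypothetical helper name; apart from 'Parabolic Comet', the class labels in the sample are placeholders, not values from the data set.

```python
from collections import Counter


def instruments_by_frequency(records, key="orbit_class"):
    """Map each class to an instrument number, 1 = most common class."""
    counts = Counter(r[key] for r in records)
    ranked = counts.most_common()  # most frequent first
    return {cls: number for number, (cls, _) in enumerate(ranked, start=1)}


# Placeholder records for illustration only.
sample = (
    [{"orbit_class": "class A"}] * 3
    + [{"orbit_class": "class B"}] * 2
    + [{"orbit_class": "Parabolic Comet"}]
)
instrument_for = instruments_by_frequency(sample)
```

With nine classes in the data, the three most common would land on the pluck instruments (one to three) and the rarer ones on the louder oscillators.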

linseg is an opcode we used to add some vibrato to instruments six through nine. It traces a series of line segments between specified points, generating control or audio signals whose values pass through two or more of those points.
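To make the "line segments between points" idea concrete, here is a small Python sketch of a breakpoint envelope. It only illustrates the concept and is not Csound's implementation; the function name and argument layout are made up for this example, and holding the final value after the last segment is this sketch's own choice.

```python
def breakpoint_value(t, start, segments):
    """Value at time t of a piecewise-linear envelope.

    start    -- value at t = 0
    segments -- list of (duration_seconds, target_value) pairs
    """
    if t <= 0:
        return start
    value = start
    elapsed = 0.0
    for dur, target in segments:
        if t < elapsed + dur:
            frac = (t - elapsed) / dur  # position inside this segment, 0..1
            return value + (target - value) * frac
        elapsed += dur
        value = target
    return value  # past the last point: hold the final value (sketch's choice)
```

For instance, an envelope that rises from 0 to 10 over one second and falls back to 0 over the next is `breakpoint_value(t, 0, [(1, 10), (1, 0)])`, which gives 5 at both `t = 0.5` and `t = 1.5`.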

Each instrument's audio-rate (a-rate) signal takes the p-fields p4, p5, and p6 (which we set to frequency, amplitude, and pan); these correspond to values found in the JSON file for each asteroid or comet that passed near Earth. The result is a series of plucking sounds with intermittent louder and higher-frequency sounds with some vibrato. The former represent the more common classes and the latter represent the rare ones.
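In a Csound score, each note is an `i` statement whose first three fields are the instrument number, start time, and duration, followed by any further p-fields. A minimal sketch of how Python could write such lines with p4, p5, and p6 as frequency, amplitude, and pan is below. The helper names and number formatting are assumptions, as is the amplitude range (0 to 1 presumes the orchestra sets `0dbfs = 1`).

```python
def score_line(instr, start, dur, freq, amp, pan):
    """One Csound score statement: i p1 p2 p3 p4 p5 p6."""
    return f"i {instr} {start:.3f} {dur:.3f} {freq:.2f} {amp:.2f} {pan:.2f}"


def build_score(events):
    """Join events into score text, ordered by start time for readability.

    events -- iterable of (instr, start, dur, freq, amp, pan) tuples
    """
    ordered = sorted(events, key=lambda e: e[1])
    return "\n".join(score_line(*e) for e in ordered)


# Two hypothetical events, given out of order on purpose.
score = build_score([
    (4, 65.167, 2.0, 233.08, 0.8, 0.75),
    (1, 5.16667, 1.5, 116.54, 0.5, 0.25),
])
```

Here `score` holds two lines, starting with `i 1 5.167 1.500 116.54 0.50 0.25`, ready to be written into the score section of a `.csd` file.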

Poetry/code in motion ~ Photo by Katya R.

Description of our code by Nicolás E. ~ See the full project on GitHub here